"Agentic systems allow for deeply personalised and interactive experiences"

Inworld's Chris Covert on why AI streaming assistants will transform both game development and player experiences.

Welcome to the latest AI Gamechangers.

Things move fast in the world of AI. Since last week’s newsletter, the online discourse has been dominated by the arrival of the DeepSeek-R1 model, leading to our favourite social media post of the year so far:

Ouch. Everyone we spoke to has been playing around with DeepSeek. And then, boom, Alibaba released Qwen2.5-Max. But do scroll to the end, because we have found some other news that people are talking about.

In the meantime, we’re here with another conversation from the frontlines of AI and game development. This week, it’s the turn of Chris Covert, the senior director of product experiences at Inworld.

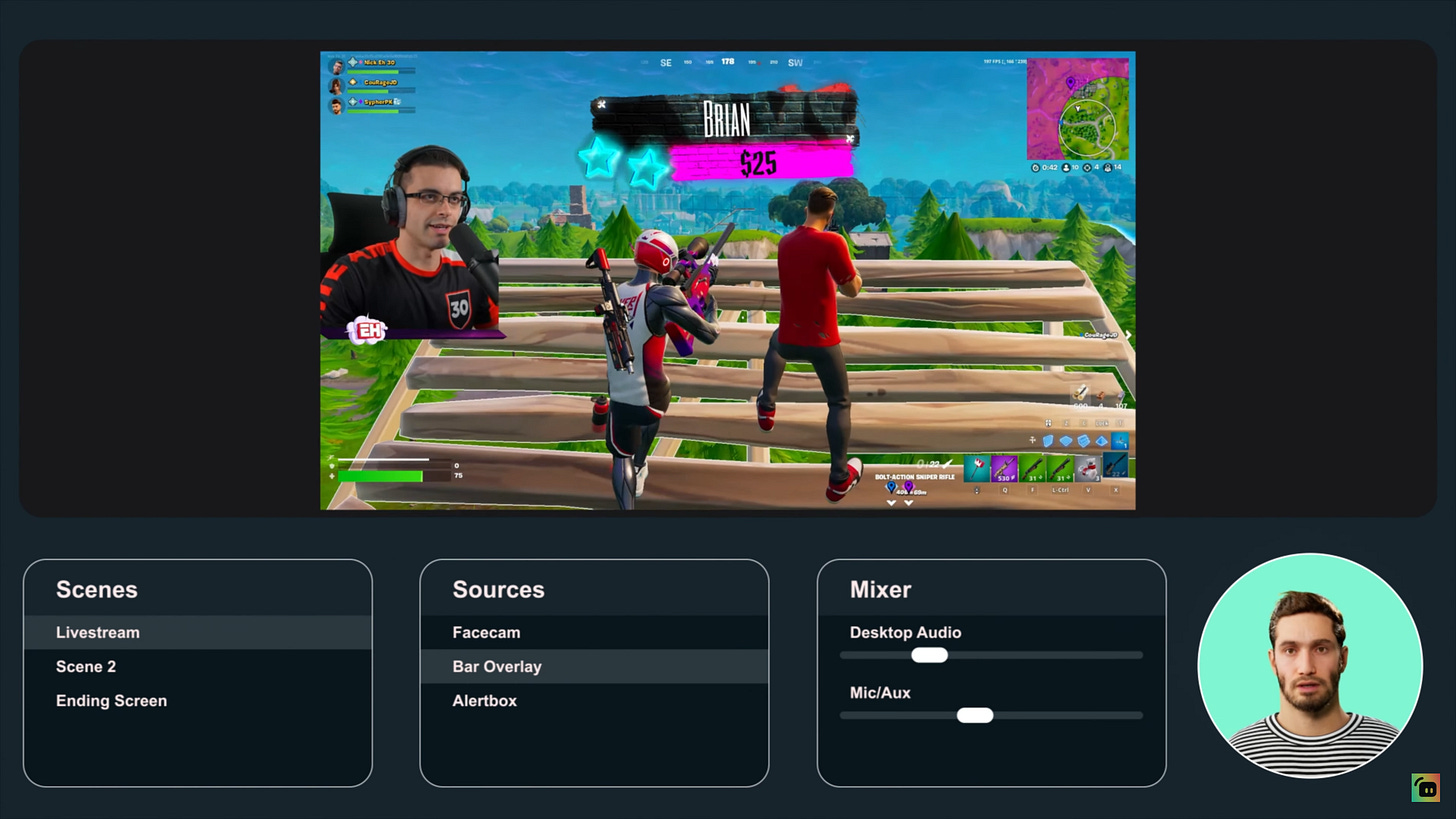

At CES a couple of weeks ago, Inworld, Logitech’s Streamlabs, and NVIDIA unveiled the Intelligent Streaming Assistant, an AI-powered co-host and production tool designed to simplify livestreaming. The partnership made a big splash, so AI Gamechangers set out to learn more.

Chris Covert, Inworld

Meet Chris Covert, the senior director of product experiences at Inworld. He brings significant experience from organisations like Microsoft, SpaceX, and the Johns Hopkins University Applied Physics Lab. Chris has been at Inworld since January 2024. Founded in 2021, Inworld develops an AI framework for video games, focusing on creating interactive AI agents that can act in real-time environments.

In this interview, we dive into the recently announced collaboration with Streamlabs and NVIDIA on an Intelligent Streaming Assistant and explore broader applications of Inworld’s AI technology.

Top takeaways from this conversation:

Livestreaming is difficult and requires a creator to juggle a lot of things, live. AI can help them stay on top of it all.

AI agents in games require a careful balance of personality and guardrails. The focus is on establishing appropriate ethical boundaries while maintaining organic interactions.

The games industry is seeing democratisation through AI tools. Both independent developers and large studios are starting at "square one" with these new technologies, with smaller teams quickly shipping AI-driven games.

Inworld is working on optimising on-device AI processing while balancing cloud capabilities, partnering with hardware companies like NVIDIA to make its technology accessible to millions of players and creators.

AI Gamechangers: Can you tell us what Inworld AI is, please? What’s your role there?

Chris Covert: Inworld is the developer of the leading AI framework for video games, and as Sr. Director of Product Experiences, I’m here to answer the question: how can we help make fun experiences more accessible to the people who make our favourite games?

Your own background includes significant experience with autonomous systems at organisations like SpaceX and Johns Hopkins APL. How does this influence your approach to developing AI agents at Inworld?

Great question, and one that I haven’t really reflected on fully until now. But both fields require systems that can perceive what’s happening around them, “think” through situations they face along the way, and act on their decisions.

That means designing these types of experiences requires thinking not about how something goes from A to B, but rather trying to understand all of the possible paths it could take from A to B and designing a system that makes intuitive decisions along the way. It’s just a new way of designing for non-linear experiences, and I love it.

You've just announced a major collaboration with Streamlabs and NVIDIA on an Intelligent Streaming Assistant. Can you please tell us what it is and what role Inworld AI played in its creation?

The Intelligent Streaming Assistant is an AI agent that serves as an extension of a streamer’s team.

Through just a single agent, the Streamlabs assistant can provide commentary as an interactive co-host and help keep the stream running smoothly as a virtual producer. By hooking into the Streamlabs API, our agent can clip important gameplay, quip at the streamer when they goof up an in-game play, nudge the streamer if they missed a donation callout, and so much more.

Even better, the streamer will be able to select their assistant’s personality to better match the tone of their stream. Personally, I’d need a calming assistant that reminds me here and there that it’s just a game, but everyone has their own preferences - which makes this a perfect application of AI.

Inworld’s AI platform provides the “brain” of the agent, allowing it to process the streamer’s input - whether that’s what the streamer says, trends pulled from their chat, or what is happening on-screen - and turn that into an appropriate thing for the assistant to say or do. That perception, cognition, and action are all handled by Inworld’s AI backend.

What makes livestreaming the perfect field to demonstrate Inworld’s AI capabilities?

Not only has livestreaming never been more popular, but it’s also incredibly hard to do, especially for people doing it completely alone! So, giving streamers (of all experience levels) the opportunity to pull in a multitasking co-host/producer/tech that helps them throughout their stream allows them to focus on what really matters: their content.

Inworld shines in real-time environments. With streaming, you have multiple things happening during the stream: chat, gameplay, commentary, production. Blink and you’ll miss the action. Unlike other agentic frameworks, Inworld is optimised for real-time applications where latency and performance are of paramount importance.

Also, agentic systems allow for deeply personalised and interactive experiences, so one agent can actually help streamers of all kinds in a variety of ways: some streamers will use this assistant to help respond to chat, others to feel like they’ve invited a friend to join their stream and joke through their matches, others simply to more naturally transition between different screen setups - and many will utilise them in a combination of all of these examples and more. That’s really exciting!

Can you tell us a little bit about the tech that’s going into it? “AI” means a lot of different things to different people. Are we talking about an LLM for the co-hosting (you’re using your own foundation model?), agentic AI for the production…? Break it down for us, please.

I may oversimplify things a bit here, but I will break it down into the inputs, the processing loops, and the outputs - all of which use different AI components to run as one fluid system.

It all starts with what we feed the agent as input. Not only are we using an Automatic Speech Recognition (ASR) model to convert the streamer’s voice into a text transcript for the agent, but we are also pulling in text from chat and using a vision model to digest what is happening on screen in-game.

All of this feeds a reasoning engine at the core of Inworld’s platform, which uses a series of optimised LLM calls of various sizes and models to quickly determine what kind of info it received, what action it should take as a result, and how to communicate that back to the streamer in a way that matches the current tone of the stream.

The output is then processed with Inworld’s text-to-speech model to give our MetaHuman avatar a voice, paired with NVIDIA’s Audio2Face system to give it facial expressions as it talks. It sounds simple, but we’re talking about doing all of this in real time with milliseconds of latency.

When put together with Streamlabs’ APIs, we get an assistant that can provide dynamic commentary and trigger mid-stream scene changes at the same time.
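To make the reasoning step described above a little more concrete, here is a minimal Python sketch of how an event from one of the three inputs (voice, chat, screen) might be labelled, mapped to an action and phrased in the stream’s tone. It is an illustration under stated assumptions, not Inworld’s implementation: the `Source`, `Event` and `Decision` types, the action names and the tone presets are all hypothetical, and simple keyword rules stand in for the “optimised LLM calls of various sizes” that would do this work in practice.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    # Hypothetical input channels: ASR transcript, chat, vision-model caption
    VOICE = "voice"
    CHAT = "chat"
    SCREEN = "screen"


@dataclass
class Event:
    source: Source
    text: str  # transcript, chat message, or caption of what is on screen


@dataclass
class Decision:
    action: str  # hypothetical action name: "say", "clip", "switch_scene", "ignore"
    line: str    # what the assistant should say ("" if nothing)


def classify(event: Event) -> str:
    """Stand-in for a small, fast model call that labels the event."""
    text = event.text.lower()
    if event.source is Source.CHAT and "donat" in text:
        return "donation"
    if event.source is Source.SCREEN and "eliminated" in text:
        return "highlight"
    if event.source is Source.VOICE and "switch scene" in text:
        return "scene_change"
    return "chatter"


def style(line: str, tone: str) -> str:
    """Stand-in for a larger model call that rewrites a line in the stream's tone."""
    if tone == "calm":
        return "No stress, but " + line[0].lower() + line[1:]
    if tone == "hype":
        return line.rstrip(".") + "!"
    return line


def decide(event: Event, tone: str) -> Decision:
    """Label the event, pick an action, then phrase any spoken line."""
    label = classify(event)
    if label == "donation":
        return Decision("say", style("Heads up: you missed a donation callout.", tone))
    if label == "highlight":
        return Decision("clip", style("That one's going in the highlights.", tone))
    if label == "scene_change":
        return Decision("switch_scene", "")  # a production action, nothing to say
    return Decision("ignore", "")
```

For example, `decide(Event(Source.CHAT, "Thanks for the donation!"), tone="calm")` yields a `say` action with the line “No stress, but heads up: you missed a donation callout.” Keeping a cheap labelling step separate from the phrasing step echoes the idea of using differently sized calls for different jobs: the quick label decides whether to act at all, and effort is spent on wording only when there is something to say.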

How do you balance the need for AI agents to have personality and agency while ensuring they remain within a game’s narrative, artistic and ethical boundaries? Is the application of guardrails something that occupies a lot of your thoughts?

Yes. Almost exclusively. Just like you and me: just because we can say and do things doesn’t always mean we should. It comes down to our understanding of what is appropriate in each situation, and the same is true for agentic systems, but without the right guardrails, the agent has no true definition of what’s appropriate.

Extending our analogy even further with autonomous vehicles: getting from A to B only works if the passengers are safe, the vehicle obeys the rules of the road, and it does so in a way that feels organic to the people interacting with it (in and out of the car). So, when we work with agentic systems, it’s the same story: it’s almost more important than anything else to consider which guardrails we need to design so the agent operates smoothly.
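One common way to picture guardrails is as a policy check that sits between what an agent proposes and what it is actually allowed to do. The Python sketch below is an assumption for illustration only, not Inworld’s system: the `Guardrails` fields, the keyword filter and the per-minute action limit are hypothetical, and production systems typically rely on moderation models or classifiers rather than substring matching.

```python
from dataclasses import dataclass, field


@dataclass
class Guardrails:
    # Hypothetical policy: permitted actions, off-limits topics, and a pacing limit
    allowed_actions: set[str]
    banned_terms: set[str] = field(default_factory=set)
    max_actions_per_minute: int = 6


def check(proposed_action: str, proposed_line: str,
          recent_action_count: int, rails: Guardrails) -> tuple[bool, str]:
    """Return (approved, reason) for something the agent wants to do."""
    # 1. Is this kind of action permitted in this context at all?
    if proposed_action not in rails.allowed_actions:
        return False, f"action '{proposed_action}' not permitted here"
    # 2. Does the line touch a topic the creator has ruled out?
    lowered = proposed_line.lower()
    for term in rails.banned_terms:
        if term in lowered:
            return False, f"line mentions banned topic '{term}'"
    # 3. Is the agent interrupting too often?
    if recent_action_count >= rails.max_actions_per_minute:
        return False, "rate limit reached; staying quiet"
    return True, "ok"
```

A rejected proposal does not have to end the interaction: the reason string could be fed back to the agent so it picks something more appropriate, which is closer to the “organic” behaviour Covert describes than a silent block.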

How do you see tools like Inworld AI’s solutions affecting independent creators versus larger studios? What does AI mean for democratising game development and production?

I don’t even have to speculate here: I’ve already seen it. We work with creators of all sizes, and the same rings true for everyone right now: we are all at square one with a new tool that can enable things that were not efficient, effective, or even feasible without AI. We are still just starting to see the first few waves of AI-driven games hit the market, and a majority of them are from smaller teams; teams of only a few developers using AI to help program elements of their game, generate concepts and prototypes, add incredibly novel and interactive mechanics, and ship something in a matter of weeks or months.

But that doesn’t mean that larger studios are doomed to lose this race by any means. On the contrary, many are thinking about AI in the context of how to revive old IPs in new, refreshing ways or integrate it meaningfully into an experience that will touch hundreds of thousands (if not millions) of players around the world.

What I love about AI as a new game development tool is that it’s given everyone a new challenge to think about and a new opportunity to make something fun for us gamers.

One of your stated challenges is scaling to millions of players while ensuring real-time AI responses. How are you approaching the technical hurdle of scaling?

Optimisation is an “evergreen” product track, and one of the most promising ways of delivering AI at scale with the lowest levels of cost and latency is to put as much of it as possible on-device. This is why working with hardware partners like NVIDIA is just as important to us as working directly with studios on new games. If we can get a model to run locally on the device that’s running the game itself, we can bypass the time it takes for calls to reach the server - as well as the costs associated with GPU inference in the cloud - and reach a lot more players faster.

But that also requires us to use smaller, more efficient models that can’t always match the quality of the models we can use in the cloud. So, the future of scaling to millions of players is really going to look like a balance of the right models in the right locations for each game. And that’s something we at Inworld work on every day.
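The “right models in the right locations” balance can be pictured as a routing decision. The Python sketch below assumes a hypothetical catalogue of models with made-up quality, latency and cost figures; it is not how Inworld decides placement, just one way to encode the trade-off described above between cheap, fast on-device inference and higher-quality cloud models.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelOption:
    name: str             # hypothetical model identifier
    on_device: bool       # runs locally on the player's hardware?
    quality: int          # illustrative relative quality score (higher is better)
    latency_ms: int       # typical end-to-end latency, incl. any network round trip
    cost_per_call: float  # cloud GPU cost; effectively 0.0 for on-device inference


def pick_model(options: list[ModelOption], latency_budget_ms: int,
               min_quality: int, device_has_gpu: bool) -> Optional[ModelOption]:
    """Pick the cheapest model that meets the latency budget and quality floor."""
    candidates = [
        m for m in options
        if m.latency_ms <= latency_budget_ms
        and m.quality >= min_quality
        and (device_has_gpu or not m.on_device)  # local models need capable hardware
    ]
    if not candidates:
        return None  # no placement satisfies this feature's requirements
    # Prefer cheaper options first, then lower latency
    return min(candidates, key=lambda m: (m.cost_per_call, m.latency_ms))
```

With a small local model (quality 6, 80 ms, free) and a large cloud model (quality 9, 450 ms, paid), a quick quip with a 200 ms budget lands on-device, while a feature that needs quality 8 and can tolerate 500 ms goes to the cloud. On a device without a suitable GPU, the quip has no valid option at all, which is exactly the kind of per-game trade-off the answer describes.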

Beyond livestreaming, how do you see the technology behind the Intelligent Streaming Assistant being applied to other areas of the games industry or the wider entertainment scene?

Under the hood, the AI components that drive this Streaming Assistant are the same ones that power all of our solutions for agentic experiences.

What do I mean by that? Well, the same systems that provide knowledge, reasoning, and text generation for the Streaming Assistant can be used to create a narrative-grounded storyboard or script generation solution for a studio’s writing team. And the same systems that can detect the streamer’s intent to change up their screen configuration and fire off a trigger to the Streamlabs API can be used to create a boss in a turn-based role-playing game that tries to interpret the player’s motivation and counteract their attacks with a strategy of its own.
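The turn-based boss example can be sketched in the same spirit. This Python version is a deliberately simple assumption: a frequency count over the player’s recent attacks stands in for the model-driven interpretation of player motivation described above, and the attack styles and counter-moves in `COUNTERS` are hypothetical.

```python
from collections import Counter
from typing import Optional

# Hypothetical counter-table: which boss response answers which attack style
COUNTERS = {
    "fire": "frost_shield",
    "melee": "keep_distance",
    "poison": "cleanse",
}


def infer_player_strategy(recent_attacks: list[str], window: int = 5) -> Optional[str]:
    """Guess the player's dominant attack style from their last few moves."""
    recent = recent_attacks[-window:]
    if not recent:
        return None
    style, count = Counter(recent).most_common(1)[0]
    # Only commit to a read once one style is a clear majority of the window
    return style if count > len(recent) // 2 else None


def boss_turn(recent_attacks: list[str]) -> str:
    """Counter the inferred strategy, or fall back to a default move."""
    strategy = infer_player_strategy(recent_attacks)
    if strategy is None:
        return "basic_attack"
    return COUNTERS.get(strategy, "basic_attack")
```

For instance, a player who has opened with one melee strike and then three fire spells gets `frost_shield`, while a mixed or empty history produces a plain `basic_attack`. Swapping the frequency count for a model call is where the “interpret the motivation” part would come in.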

It boils down to the question: what can you do with a system that can “hear”, “think”, “speak”, “see”, and “act” in configurable ways? Well, this is the fun challenge, because this can be applied pretty much everywhere…

After streaming and NPCs, where do you see the most promising applications for AI agents in games over the next few years? Are there areas of AI innovation that are currently underestimated by the industry?

We already see a wave of companies across enterprise solutions thinking about personal assistants. There is a personal assistant for everything from banking to healthcare to smart home monitoring, but these personal assistants aren’t really all that fun (nor are they designed to be). For us in gaming, this translates into the idea of an in-game “companion.”

In a game, we can think of this as a character that accompanies us through our missions, provides in-game help, onboards us via an interactive tutorial, or emulates another player in the game to serve as our ally (or rival).

But outside of a game, these same agents can help us configure our levels, code out new parts of our game, manage live-ops analytics to make sense of player trends as they develop, and augment nearly every aspect of game development in a way that complements the creative process end-to-end.

I think the only thing that is truly underestimated, from my point of view, is the speed at which we are seeing advancements across the board. In the last year alone, there have been generational leaps in the quality and efficiency of nearly everything we work with. While this is great for the entire industry, it makes designing around the current state of the art a fun trap. If you want to design something around AI, aim for the stretch goals, and the industry will make it a reality faster than you think.

Further down the rabbit hole

Dive into the world of AI and games with a selection of this week’s news.

The AI news cycle was thrown into a frenzy by the launch of DeepSeek last weekend. Not only is its R1 model at least as capable as OpenAI’s o1, but it also runs at just 5% of the cost. And it is open source. And it reportedly cost just $5.6m to train. And you can apparently run it on a local rig built for about $6000. The Chinese service was hit with a large-scale cyberattack but has remained available to try via its online chat interface. The DeepSeek chat app became the number one free download on the Apple App Store, overtaking ChatGPT (although it was blocked in Italy). All this triggered a selloff of American AI stocks in what VentureBeat described as a “bloodbath”. Chip manufacturers fared the worst, with semiconductor giant NVIDIA seeing nearly $600 billion wiped off its market cap on Monday. There was chatter that DeepSeek may have been trained on the outputs of OpenAI models, leading to much laughter about hypocrisy, and to perpetually angry tech writer Ed Zitron inviting Sam Altman to “cry more”. Altman seemed to confess in a Reddit Q&A on Friday that OpenAI had been “on the wrong side of history” regarding open source AI. There’ll probably be more fun and games by the time you read this sentence.

Then the AI world spun again when Alibaba dropped its Qwen2.5-Max model, claiming superiority over DeepSeek, Claude and ChatGPT in key benchmarks. You can try it out using its chat interface. The Qwen2.5 family also includes VL (vision-language) and 1M (long-context) models.

So what else is going on? Pocket Worlds acquired Infinite Canvas, an AI-powered UGC development platform with over 100 games to its name, to enhance its flagship platform, Highrise. Highrise has 40 million users and over $250M in revenue.

Faraway Games launched its RIFT platform, a "Shopify app store for AI agents" that enables AI agents to be upgraded with new functionalities like creating NFTs or managing storefronts, with transactions powered by the new RIFT token on Solana and eventually Faraway's blockchain.

AI-powered social games platform Little Umbrella has raised $2 million in seed funding to scale its live ops and launch new titles using its Playroom game kit. This follows the success of its title Death By AI, which attracted 20 million players.

Data guru Sensor Tower has revealed its State Of Mobile 2025 report. The spread of AI is in the number one spot in its Executive Summary. Skip to chapter two to see the discussion of the explosive growth in AI-enabled mobile apps.

Alien Worlds’ Lynx LLM invites users to vote on (and create new) official lore. The game’s universe was partly created by sci-fi scribe Kevin J Anderson, and publisher Dacoco has launched a customised AI that can be quizzed as a virtual librarian on the lore, and generate new ideas.