“Teams will leverage more of their skills now there are automation tools”

Christoffer Holmgård, CEO of modl.ai, reveals how AI bots can speed up QA and bug fixing (and make games more fun).

Welcome to the latest edition of AI Gamechangers, where we take a deep dive into how AI genuinely impacts the video games scene. Forward this to your colleagues and encourage everyone to register for free – you won’t want to miss future editions (we have Q&As with the bosses of award-winners Kinetix, Magicave, Alison.ai, Quicksave Interactive and more already scheduled). This week’s interview is with Christoffer Holmgård of automated testing and bot outfit modl.ai. Scroll down for news on the SAG-AFTRA strikes, a video about the legality of AI in the workplace, and more links.

Christoffer Holmgård, modl.ai

In today’s AI|G feature, meet Christoffer Holmgård, co-founder and CEO of modl.ai. Modl.ai brings AI-driven testing and player simulation to games development. Its AI engine provides virtual players for automated quality assurance and multiplayer bots, enhancing both development processes and player experiences. With a background in indie game development and a PhD in machine learning, Holmgård brings a unique blend of practical and academic expertise to the field. We discuss AI in gaming, the future of QA testing, and how AI can complement rather than replace human roles.

Top takeaways in this interview:

Holmgård’s team provides AI-powered virtual players that serve two main business functions: automated QA testing to streamline game development cycles, and multiplayer game bots that can provide human-like opponents.

The QA automation approach combines multiple AI models: self-learning bots that build a representation of the game from scratch, and behaviour models trained on human player data (typically 10 to 40 hours of gameplay).

The modl.ai team believes AI tools like this are complementary to the human QA process and will enable QA departments to quickly focus on analysis and feedback.

For the multiplayer bot implementations, they prioritise cost-efficient deployment suitable for server-side scaling, human-like behaviour (including quirks), and multiple skill levels to match different player abilities.

AI Gamechangers: Please give us an overview of what modl.ai does.

Christoffer Holmgård: We’re building a behavioural AI engine for video game development pipelines and for games themselves. What a “behavioural AI engine” really means is that we provide virtual players for games, or bots, and then we work to find the places that make a real, meaningful difference to developers in how they build games.

We’ve found two places where we can really make a difference for developers. One is using these virtual players for automating aspects of quality assurance. The other part is serving them up for multiplayer games so that you may play against them.

Last year, we brought out our Unity solution for automated QA and made some multiplayer bot projects. Then, at Gamescom, we announced Unreal solutions and support for Unreal. The time since we were at GDC 2023 has been spent working with games studios and expanding along those lines. [We have been] going to market, getting the solution out, and working with developers, which has been a big focus area for us.

What’s your own personal relationship with AI? Are you a programmer by profession?

I’ve been making games since 2008. I came out of the indie scene, shipped titles across a bunch of platforms, and then, around 2011, I got involved with AI and machine learning research. I ended up doing a PhD in that! I come at it very much from the technical side of things, but I did my undergraduate studies in psychology.

That process of being in the indie space, and in the academic space, that’s where all the founders behind modl.ai met through the years. We didn’t start the company until 2018 because we didn’t think the technology was ready. Over the years, we’ve tried to be very forward-thinking but also pragmatic in terms of what you can do with AI that would make a difference to the game production process. Is something just cool or interesting? No, it has to be functional and useful! What do you actually need when you’re building video games? What would move the needle in the production process?

What we’re doing is simulating players, and that is informed by our backgrounds. It brings those three things together: how do you work with behavioural data, decision-making, and actions that people are taking?

What does modl.ai’s AI bring to the QA scene? What problem do you solve?

At a high level, we’re working to address the fact that game development processes are still very much stop-and-go; the way testing fits into that is part of the issue. You can look across the industry at game launches in recent years and see developers’ frustrations, the waste or risk, and the things that slow down game production – they come from the fact that there are many disciplines involved. There is a lot of handover happening.

We think that one of the things that you could do to make that better would be to have the testing process be a more integrated part of how you work. That’s how it’s done with other kinds of software, and has been done for many years: it automatically gets built, it gets tested, you get reports back, and then human QA comes in and says, “What are we going to do about this?” The fact is that games have been so hard to operate using software that you couldn’t really automate the testing part to any large extent. It’s such a rich experience; it’s so hard to operate a game.

That’s what we do. With AI, we try to automate parts that haven’t been automatable before so that the QA people (who would normally have to spend most of their time just figuring out what to do next) can jump right to that analysis step and start helping the teams make decisions. For me, it’s a story of tightening development cycles and making game development easier and more efficient.

QA is a communication profession. I think teams will upskill and leverage more of the skills they already have – and have a greater impact in the industry – now there are automation tools.

Christoffer Holmgård

People are afraid that AI will replace human jobs. Does your technology complement the process, or are we seeing the end of human QA testers?

We very much see it as complementary.

I had the benefit of being at Game Quality Forum about a month ago in Amsterdam with hundreds of QA folk. What really, really shone through was that it’s a profession fundamentally oriented towards making organisations run smoother and be more effective. QA is a communication profession. It’s just that you have to do all of these manual operations to figure out what’s relevant information for the rest of the team.

I mention that because I think QA teams will upskill and actually leverage more of the skills that they already have – and have a greater impact in the industry – now there are automation tools. Does that mean the nature of the work changes? I would say, yes, probably. But I would say not for the worse.

Would it mean that fewer people are involved? That remains to be seen. But I hope that because the value addition multiplies, you’d want to keep the same kind of engagement there.

Games continue to get bigger and more complicated. So it’s almost like an arms race for QA testers. Do you imagine there’ll always be more and more for them to do?

Yes. More efficient QA and better leveraging of QA resources might be a secret weapon for competing overall in the games market. If you listen to the really big companies, the triple-As, a lot of them are communicating about that now. Quality is going to be a factor in competition. I think the only way to drive that is by having more automation and being more efficient around it.

Without giving away your secrets, please tell us what data the testing bots are built on. Have you spent years analysing how people navigate level maps and similar things?

I sometimes call us a “kitchen sink” AI company because we’re very eclectic regarding what we source to solve the problem. We have multiple AI models and systems underlying the testing bots, depending on the problem you’re trying to solve. We don’t think there’s a one-size-fits-all [solution], at least not yet.

We have bots that we can run in your game that have no a priori models but build a representation of the game as they play it; give one a brand new level, and it knows nothing, but it figures it out as it works through it.

We have other models that are based on actual human player data. So, we collect data from somebody playing the game; typically, we need at least 10 hours of somebody playing. We think 40 hours is a good number. Then you model over that, and then [the bots] start being able to execute the game like a human player would. That’s not always what you want for testing purposes because sometimes you want something that doesn’t play like somebody who’s playing for fun, but somebody who’s playing to test.

More recently, we’ve started looking into using large foundational models, actually visual LLMs, that can look at the screen and tell you what’s going on. If you combine that with some of the other methods, they can start informing the team. So, you get different levels of decision-making.

We have some methods which just work by the pure logic of it, like what you’d call old-fashioned AI. And we have some based on people actually interacting with and playing the particular game. And now we are adding in these large models that can help provide more context on what’s happening in the game.

For testing purposes, you don’t necessarily want the bot to behave like a player. You almost want to push it to breaking point and see the leaks in the level...

[We have a bot] that shows up in the game and knows nothing, but over time, it’s building an internal map of the game geometry and the different game states. It also knows what buttons to push. Let this run for six to 10 hours, or even just a few minutes, depending on the size of the level. If there was a hole in the level, it would fall through and identify that, then you could use that data.
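To make the idea concrete, here is a minimal sketch of a bot that starts with no knowledge of a level, builds a map as it moves, and flags any spot where it falls through. It is an illustration only, not modl.ai’s system: the toy grid, the `step` function standing in for the game, and every name in it are assumptions made for this example.

```python
from collections import deque

# Hypothetical toy level: '#' wall, '.' floor, 'O' a hole in the floor, 'S' start.
LEVEL = [
    "#######",
    "#S...##",
    "#.##.O#",
    "#....##",
    "#######",
]

MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def find_start(level):
    """Locate the 'S' tile so the bot has somewhere to spawn."""
    for r, row in enumerate(level):
        for c, tile in enumerate(row):
            if tile == "S":
                return (r, c)
    raise ValueError("level has no start tile")


def step(pos, action):
    """Stand-in for the game: apply one input, return (new_pos, fell_through)."""
    dr, dc = MOVES[action]
    nr, nc = pos[0] + dr, pos[1] + dc
    tile = LEVEL[nr][nc]
    if tile == "#":
        return pos, False       # blocked by a wall, the bot stays put
    if tile == "O":
        return (nr, nc), True   # the bot drops out of the level
    return (nr, nc), False


def explore(start):
    """Breadth-first exploration that records every position the bot reaches."""
    visited = {start}
    holes = set()
    frontier = deque([start])
    while frontier:
        pos = frontier.popleft()
        for action in MOVES:
            new_pos, fell = step(pos, action)
            if fell:
                holes.add(new_pos)          # report it, but do not explore from it
            elif new_pos not in visited:
                visited.add(new_pos)
                frontier.append(new_pos)
    return visited, holes


visited, holes = explore(find_start(LEVEL))
print(f"mapped {len(visited)} tiles, holes found at {sorted(holes)}")
```

A real game has far more state than a grid position, so the interesting work is in deciding what counts as a “state” and how to explore it efficiently; the loop above only shows the shape of the idea.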

That approach is not limited to just trying to break your game inside the 3D or 2D levels. You can also use it for things like graphical user interface testing. We use this system to test a narrative game, where you can think of the bot walking through all the different storylines in the narrative game to make sure they all work and that there are no dead ends. You can imagine someone would have had to spend their whole day doing this to cover this game. Instead, you can just have this go through it over the weekend; the QA person comes back on Monday and can see what broke and what didn’t break.
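The narrative-game check described above can be pictured as a graph walk. The sketch below is a simplified illustration, assuming the story can be expressed as a dictionary of scenes and the scenes each choice leads to; the scene names and the `audit_story` helper are hypothetical and are not taken from any real game or from modl.ai’s tooling.

```python
# Hypothetical story graph: each scene maps to the scenes its choices lead to.
STORY = {
    "intro": ["forest", "castle"],
    "forest": ["cave", "castle"],
    "castle": ["throne_room"],
    "cave": [],                      # no choices and not an ending: a dead end
    "throne_room": ["ending_good"],
    "ending_good": [],               # a legitimate ending
    "cut_scene": ["ending_good"],    # nothing links here: unreachable content
}
ENDINGS = {"ending_good"}


def audit_story(story, start, endings):
    """Walk every storyline from `start`; report dead ends and unreachable scenes."""
    seen = set()
    stack = [start]
    while stack:
        scene = stack.pop()
        if scene in seen:
            continue
        seen.add(scene)
        stack.extend(story.get(scene, []))
    # A dead end is a reachable scene with no onward choices that is not an ending.
    dead_ends = sorted(s for s in seen if not story.get(s) and s not in endings)
    unreachable = sorted(set(story) - seen)
    return dead_ends, unreachable


dead_ends, unreachable = audit_story(STORY, "intro", ENDINGS)
print("dead ends:", dead_ends)
print("unreachable:", unreachable)
```

In practice the bot has to discover this graph by actually playing, since the game rarely hands it over as a tidy dictionary, but the report the QA person reads on Monday morning is conceptually similar.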

Alternatively, we can train them on human behaviour. We can put four of them in a game, and they can play against each other. This is good for other kinds of testing and making sense of the game. Or we can even serve that up with a game, as a bot if you’re playing a multiplayer game.

We collected data at a LAN party where people were just having fun. Once in a while, these bots will start throwing grenades up into the air. Because that was a behaviour that became a thing at that event! You get those idiosyncrasies.

Christoffer Holmgård

How do the models differ in QA testing compared to multiplayer game bots?

With the multiplayer bots, they have to be much more attuned to the player experience. For lack of a better word, they need to be more ‘human-like’.

The paradigm we follow for the testing bots is that we imagine the AI living outside of your game, but it’s connected into the game through a hook. And that allows us to flexibly change out the different agents or the different models that you can run inside of the game, for other purposes. But it’s not as efficient as if you took that AI model and put it inside the game. You have to think, for multiplayer games, that whenever you’re running one of these bots up there, they have to run on a server somewhere – that will incur costs in terms of runtime, especially if you’re a free-to-play multiplayer game. Even if you’re a classic premium game, the cost of running that AI goes somewhere! Its performance has to be good to make sense from a business side of things, but it also has to be feasible to do at scale. So we’ve developed expertise in both directions.

The other thing you must think about when making multiplayer bots is that you probably don’t want just one! An approach we take with them is splitting them into different skill levels so that they’re appropriate for different players.

Those are the two main differences: how do we deploy with the customer? And how deep do we go in, in order to make sure that the player experience is right? The underlying approaches are shared, but diverge at the deployment stage.

How do you balance the skill level? Because a well-trained bot could conceivably be the best player in that level, and that’s not fun for the players.

That’s right. That’s why our primary way of developing the bots is learning directly from what human players did in the game. You get the idiosyncratic behaviour of human players. So you don’t get this alien AI that has been trained to be the optimal player in the world. No, it will replicate all the weird things that human players do when they play.

For instance, we collected data at a LAN party where people were just having fun, not playing competitively. Once in a while, these bots – when they get a kill – will start throwing grenades up into the air. Because that was a behaviour that became a thing at that event where we collected the data! You get those idiosyncrasies. You want them there if you want it to be a fun experience.
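A toy example shows why learning from human play carries the quirks along with it. This is a deliberately simple frequency-based imitation policy, assuming play has already been reduced to (situation, action) pairs. The log, the labels and the function names are invented for illustration; production behaviour models are far richer than a lookup table.

```python
import random
from collections import Counter, defaultdict

# Hypothetical log of (situation, action) pairs recorded from human play.
LOG = [
    ("enemy_visible", "shoot"),
    ("enemy_visible", "shoot"),
    ("enemy_visible", "take_cover"),
    ("just_got_kill", "throw_grenade_up"),
    ("just_got_kill", "throw_grenade_up"),
    ("just_got_kill", "reload"),
    ("just_got_kill", "reload"),
]


def fit_policy(log):
    """Estimate, per situation, how often humans chose each action."""
    counts = defaultdict(Counter)
    for situation, action in log:
        counts[situation][action] += 1
    policy = {}
    for situation, actions in counts.items():
        total = sum(actions.values())
        policy[situation] = {a: n / total for a, n in actions.items()}
    return policy


def act(policy, situation, rng):
    """Sample an action in proportion to how often humans picked it."""
    actions = list(policy[situation])
    weights = [policy[situation][a] for a in actions]
    return rng.choices(actions, weights=weights, k=1)[0]


policy = fit_policy(LOG)
rng = random.Random(0)
print(policy["just_got_kill"])
print([act(policy, "just_got_kill", rng) for _ in range(5)])
```

Because the policy samples from what people actually did rather than picking the single best move, the celebratory grenade survives into the bot’s behaviour about as often as it appeared in the data.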

What are you working on next?

We’re always iterating on the core products. I couldn’t give you a launch date for this, but there are two things we’re excited about.

Firstly, we know integrating into games is always the main thing that takes time for developers when they want to use our products. The less the game developer has to integrate for testing purposes, the better off they are. After all, they are out to test their game; they’re not interested in putting a lot of software development effort into achieving that. The less maintenance they have, the better. We’ve been working on taking games and then learning to play them without an integration. What if we could take the same things that we usually hook into the game to play, but could learn to play it just from the screen, maybe from recordings of how people played the game? [We have a] prototype, where we grab the game from the app store. We don’t have the source code, we don’t have anything like that, but we built a system that observes what’s on the screen and measures what players were doing, in order to learn. That’s also where some of these large language models can come in and help make that more generalisable.

Secondly, what do you do to make the QA person’s job easier when consuming that information? We always video record everything that happens when running a test for a customer. We’ve been working on taking the videos from testing the games and finding the interesting bits. Imagine you see an image with a missing facial texture, and when you send that off to an AI model, you can ask the AI model to assess what’s wrong in this image, what’s going on, and what we should do about it. It produces a bug report on the other side. A human QA tester would have to play the game, identify this, write it down, and then work can start. What we’re looking to do is that once the automated gameplay has happened, the analysis is automated, and it’s handed off to the human user to decide what should happen next. What we’re looking to do is just make people’s lives easier with this kind of tech.

For [generating the report], we’re using an existing LLM, and then we have our own context, prompting, and examples in between.

The overall vision for modl.ai is to keep building this AI engine to the point where it eventually becomes like a drop-in or add-on system to any game. Once you have these AI players available to operate a game, we build out this whole ecosystem of services around it to make game developers’ lives easier and players’ experiences better. It’s about building up this stack of intelligence until we can support as many games as possible, and then having the right support services around it so that it’s useful. And that’s our ambition: to be the industry’s go-to behavioural AI engine. We think this will be a very important ‘module’ in the process of game development, one we’d like to make widely available to devs. If you don’t have an R&D AI research team, you can still leverage some of these benefits.

Be mindful of where the data comes from. Check if you feel you can ethically and responsibly use the solutions from a vendor from an integrity point of view.

Christoffer Holmgård

Do you take an interest in ongoing conversations around AI legislation, data, privacy and other legal factors?

We track it continuously. It hasn’t had a big impact on the way we operate or our business model. We’re a business-to-business tool; we’re a tool for developers. We’re interested in what people do in the games, and we’re interested in their assessments, but we don’t need to know who you are. We’re not an advertising company!

To the extent that we use LLMs, we’re also not replicating other people’s creative work. We go about it in a very instructional, agentic way. But we have to be aware of it, and I’m glad we have a core stack that isn’t 100% LLM-dependent. We’re not just a wrapper around an LLM; we have a core part that is completely aside from that discussion, and then we’re just trying to leverage the foundational models the best way we can.

You have a background in indie games. What advice do you have for developers who are starting with AI technology like this today?

Definitely explore and try it out. Gain a little bit of experience before you start building your whole workflow around it. I come from the pragmatic side of things, so I say investigate. You’ve got to figure out what’s right for your game production flow.

Of course, also be mindful of where the data comes from. Check if you feel you can ethically and responsibly use the solutions from a vendor from an integrity point of view.

At the end of the day, small and medium-sized game productions stand to benefit more from many of these coming tools. They will help them keep competing with larger titles. Across the industry, we’ll see folks looking into and benefiting from it.

Further down the rabbit hole

Some useful news, views and links to keep you going until next time…

India’s games and esports company Nazara plans to partner with the government of Telangana, a state in south-central India, to launch an AI centre of excellence focusing on games and digital entertainment.

Project Makina, a suite of AI-powered development tools by Jabali, has entered closed beta. The platform enables users to quickly generate fully functioning games across more than 10 genres using natural language inputs.

Coinbase Developer Platform witnessed the first AI-to-AI cryptocurrency transaction last week, where one AI purchased tokens from another using the digital stablecoin USDC. A significant step towards enabling AI agents to access paid services or resources online?

While SAG-AFTRA continues its strike against major game developers, begun in July 2024, it has successfully negotiated agreements with 80 games companies that include AI protections for voice actors and performers. These “tiered-budget or interim agreements” provide “common sense AI protections” against unregulated AI use of actors’ performances in games.

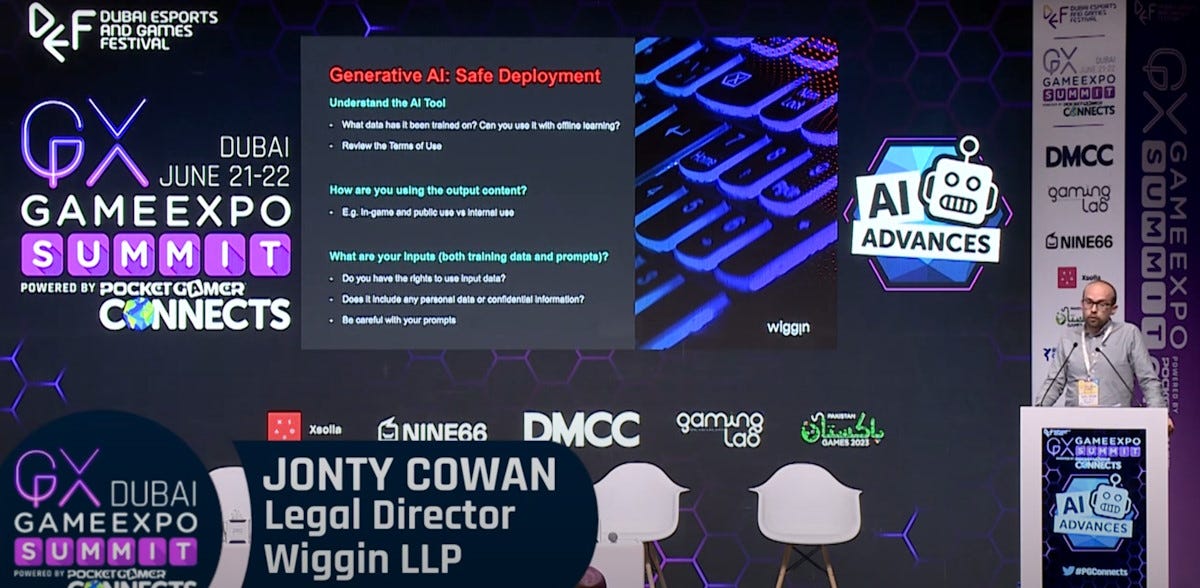

This video is a few months old, but it’s still worth a watch. Legal director Jonty Cowan of Wiggin LLP gives practical advice on what to consider if you’re going to use generative AI as a company. “Generative AI: Maximising Deployment Whilst Minimising Legal Risks” from the GameExpo Summit.