"It’s UGC: enabling people to do things they couldn’t do before with AI"

Yassine Tahi of Kinetix explains how AI transforms videos into 3D emotes, so players can express themselves.

Welcome to the latest edition of AI Gamechangers. Each week, we interview a leader in the games industry to discuss the role AI plays in their field. Forward this to your colleagues and encourage them to register for free. We already have Q&As with Alison.ai, Quicksave Interactive and more ready to roll out this month. This week’s interview is with Yassine Tahi of award-winning emotes outfit Kinetix.

Scroll to the end for more news and links, including the results of the first International AI Olympiad and a new consortium exploring AI storytelling in film and TV.

Yassine Tahi, Kinetix

In this week’s AI|G feature, meet Yassine Tahi, the co-founder and CEO of Kinetix. His team specialises in AI-animated avatars and user-generated content for virtual worlds. Kinetix recently topped the Best AI Games Tech category at the Mobile Games Awards and this month announced a $1 million fund to accelerate AI-powered user-generated content in games. Tahi has led Kinetix since 2020, focusing on transforming 2D videos into 3D animations. Today, he tells us about enabling players to customise their own emotes, a significant shift towards democratising content creation in games.

Key takeaways from this great Q&A:

User-generated content is a significant trend, and AI will accelerate it. Kinetix’s technology lets players turn 2D videos into 3D emotes, enabling deeper self-expression in games.

AI can broaden game development, making it possible for potentially billions of players – not just professionals – to create, share and monetise their own content within virtual worlds.

An ethical approach to AI training data is essential. Kinetix places a strong emphasis on ethical data collection by collaborating with artists and creators to build diverse datasets, prioritising inclusivity.

AI Gamechangers: Please tell us about your background. How did you get where you are now?

Yassine Tahi: We started the company in 2020, which made us one of the first AI companies in the creative space.

In the beginning, we started the company because we saw the rise of AI research papers and more and more research into human body motion. We came at it from the tech side because my co-founder and CTO was doing research in AI. I came from more of a VC background, and I knew that VCs were starting to look at gaming because the market was big and growing very fast.

I thought, let’s call some studios and start asking them questions about how AI can accelerate their workflows. It was during Covid, so everyone had a lot of time to answer my questions! We had a lot of talks with studios, and we saw a big opportunity at the time. Ubisoft was looking at it, and there was a lot of interest. So, we raised some funds and started working on our model. After a year and a half, we had 50,000 creators on the platform using it and giving us feedback, and we realised that the AI technology for 3D animation, for avatar animation, was not mature enough to be a game changer at the time. This was at the end of 2021.

“If you look at the state of the art today, animation is the last one. You have text, image, video, and then 3D and then animated 3D. It’s the most difficult part. There is no motion data to speak of.”

Yassine Tahi

At the same time, we saw a lot of gamers and TikTokers coming to our platform, creating emotes and animations and asking us how to use them. We saw the rise of virtual worlds; the metaverse was a big topic. So we started exploring virtual worlds. Big games have economies inside them, and we realised that emotes are one of their big revenue sources. Depending on the platform, they represent between 20% and 35% of the in-game assets sold to users.

We started doing some research, and we found that while skins are obviously the thing everyone sees and talks about because they are so visible, emotes are the second-biggest category. People are willing to buy and use emotes. However, animation is super complex, so people cannot access it. So offering people a way to customise their emotes was something completely new for them, and something they were very excited about, because they couldn’t do it before. Animation is self-expression. It’s interaction with other people.

It was a big opportunity for us, and we decided to pivot completely. We raised money and decided to stop targeting creators, animators and professionals, and instead target people who couldn’t animate before – the gamers.

What platforms and environments do you work in? Where can we see your emotes today?

We had some smaller games using our technology to start with and iterate on, but our first big title, Overdare, launched today. It’s Krafton’s new multiplayer social game.

We have a community of modders who have started integrating our technology into GTA, with great metrics, and we’re also finalising the integration with a fighting game where you can play emotes mid-match. You can customise the emote you use to brag while you’re fighting other people.

Mostly, our in-game technology is used for socialisation and expression. People also use it to create videos and share them on social media. We’ve seen some good use cases of creators making emotes, sharing them on TikTok and getting millions of views! People are definitely interested in customising their avatars and how they express themselves through them – and they’re ready to share and pay for that.

Without giving away your secret sauce, how do you leverage AI in the creation of these emotes?

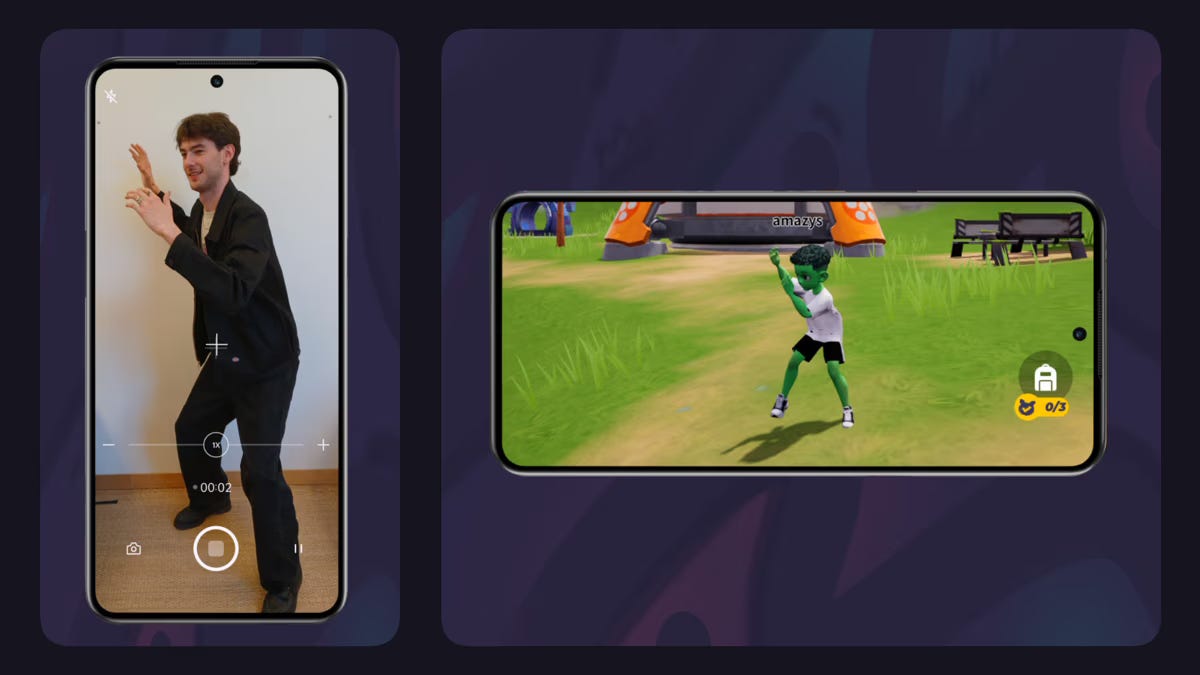

We are an AI company, and R&D accounts for more than a third of our headcount today. We invest a lot in our core algorithm. The core algorithm of Kinetix, our AI, takes a video (any video from the internet, or one you film yourself) and transforms it into a 3D animation. We extract information from any 2D video, transform it into 3D, and then apply that 3D animation to any kind of avatar.

We have multiple layers of AI generation, from video but also from text: we are starting to do some text-to-motion. We’re also working on transferring animation from one avatar to another, which is a complex topic because every avatar has a different body morphology. When you change morphology, you lose quality. So we’ve been investigating how best to transfer an animation from one avatar to another.
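Tahi doesn’t describe how Kinetix handles this transfer, so what follows is not the company’s method, only an illustration of why morphology makes it hard. It sketches the most naive retargeting baseline under assumed simplifications: both avatars share joint names and a matching rest pose, each animation frame is stored as a root position plus local joint rotations, and root motion is scaled by the ratio of the two avatars’ leg lengths. The `Frame` type, the `naive_retarget` function and the sample values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z) local joint rotation


@dataclass
class Frame:
    """One frame of skeletal animation (hypothetical, simplified format)."""
    root_position: Vec3               # hips position in world space, metres
    joint_rotations: Dict[str, Quat]  # local rotation per named joint


def naive_retarget(frames: List[Frame],
                   source_leg_length: float,
                   target_leg_length: float) -> List[Frame]:
    """Copy joint rotations unchanged and scale root motion by leg-length ratio.

    Assumes both skeletons share joint names and a matching rest pose.
    All other differences in limb proportions are ignored.
    """
    if source_leg_length <= 0 or target_leg_length <= 0:
        raise ValueError("leg lengths must be positive")
    scale = target_leg_length / source_leg_length
    retargeted = []
    for frame in frames:
        x, y, z = frame.root_position
        retargeted.append(Frame(
            # Shorter legs should cover proportionally less ground.
            root_position=(x * scale, y * scale, z * scale),
            # Local rotations carry no bone lengths, so they are copied as-is.
            joint_rotations=dict(frame.joint_rotations),
        ))
    return retargeted


# Usage: a hypothetical two-frame step moved onto an avatar with legs half as long.
clip = [
    Frame((0.0, 0.9, 0.0), {"left_knee": (1.0, 0.0, 0.0, 0.0)}),
    Frame((0.0, 0.9, 0.5), {"left_knee": (0.9239, 0.3827, 0.0, 0.0)}),
]
small = naive_retarget(clip, source_leg_length=0.8, target_leg_length=0.4)
print([f.root_position for f in small])
```

Because every proportion other than leg length is ignored, the copied rotations place hands and feet in slightly different spots on the new body. This is where artefacts such as foot sliding and limbs clipping through the torso come from, and why production pipelines typically layer corrections such as inverse kinematics on top of a baseline like this.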

Kinetix has raised some funding over its journey. How are you investing your resources to further your mission?

First, our core algorithm. It’s multimodal: video- and text-to-avatar animation is our main focus. If you look at the state of the art of the research today, animation is the last one. You have text, image, video, and then 3D and then animated 3D. It’s the most difficult part. It’s technically complex. And there is no data. There is no motion data to speak of. There are a few hours of motion data on the internet, but compared to video, text and images, that’s nothing. So we create our own data sets. We are building the biggest library of motion in the world, working with hundreds of artists, dancers and other people creating movements. That’s our main focus.

Then we train our own algorithms, fine-tuning and perfecting them so that any video you input will work, and any avatar you input will work. That’s the core of our investment. It’s the core of our company.

We distribute the technology to gamers, and for that, we need partnerships with big studios so we can integrate it into their games. We had to create a whole SDK and integration layer to be able to work with big studios. You need to be able to sustain millions of users. We had to build a cloud infrastructure because we’re in charge of streaming the assets into the game and distributing them there.

“UGC is pivotal because we’re not talking about 20 or 30 million game developers. We’re talking about 100 million or maybe even billions of players who could become participants and use AI as part of their journey.”

Yassine Tahi

It’s been a two-year journey. It’s basically like Ready Player Me for emotes. We built a whole infrastructure to serve these games. Now it’s ready, and it has been tested and validated. We passed the test for the alpha version of the Krafton game. Now, we’re heading to our global release, which is a great achievement.

One of the big topics at the moment is the ethics of training data and where that data comes from – including biases and privacy. How is Kinetix staying mindful of those topics as you do your training?

That’s a pretty easy topic for us because most other companies training models are using existing data sets. It’s a bigger problem for them because they usually don’t have the rights to that data.

We have to create our data sets ourselves, and we’re working with artists and dancers. In the contracts we sign with them, we make sure they’re aware of what we are doing. We’re paying them to do it. We’re doing it in partnership with them rather than taking their data without their knowledge. That’s the first step.

The other thing is bias and diversity, and that’s a very important topic for us. I’m LGBTQ+ myself, so I’m part of the community, and we also have one of the biggest LGBTQ+ firms behind us. We’ve been involved in making sure that our data sets are not biased. Even in the training, we make sure that we have guys, girls and nonbinary people, with a wide range of body sizes and shapes. It’s at the core of our company’s values. But it also serves our business purpose because our users can be of any age and shape. We’re about self-expression. We need to enable people to express how they truly are. If our AI can’t recognise or transform someone because they’re Black or because they’re a girl, that’s a problem for us. So we do it for our values, but also because it serves our business: we need to address the whole population and make sure our algorithm works for everyone.

That’s our commitment on those topics. It’s a blessing and a curse: we have to create everything from scratch, but at the same time, it protects us, because we are doing it the way we want to do it.

Please tell us a little bit more about your work with Overdare from Krafton. What will the user’s experience be like?

If you’ve played MMOs and multiplayer games, you know that when you start a game, you customise your avatar. You spend time shaping its face and body, and so on. Usually, the next step is that you start playing.

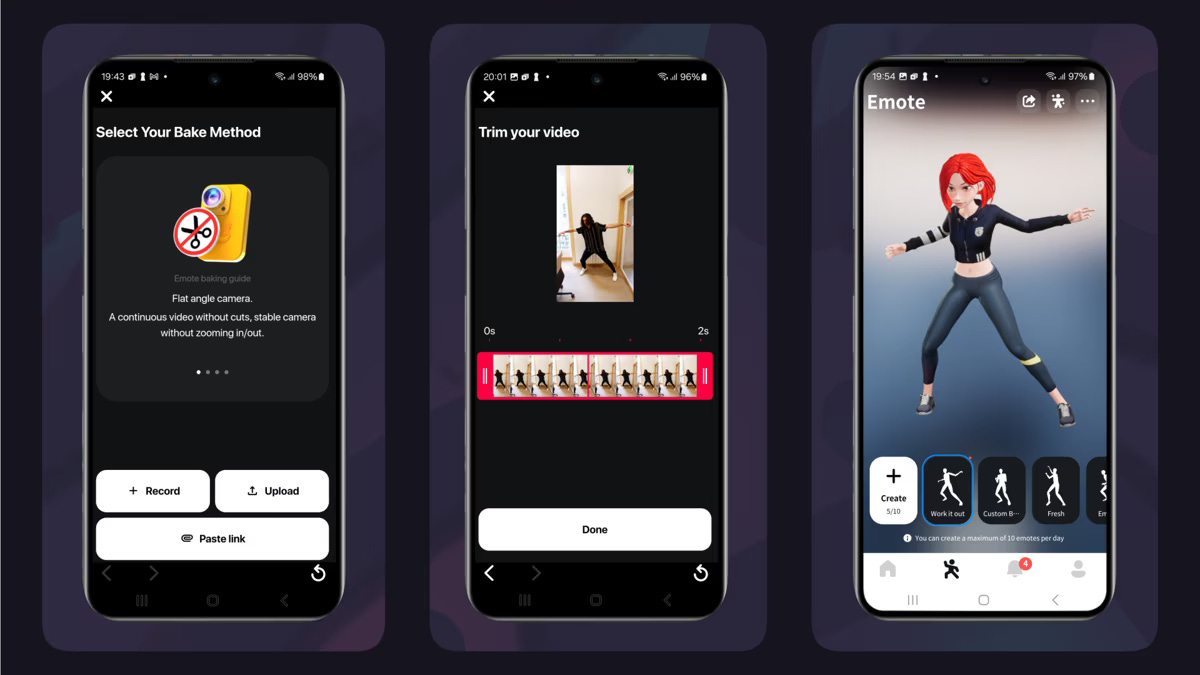

Now, the next step will be that you can also customise your moves in the game! You have multiple slots, and every day you get new slots that you can fill. You click a “plus” button. It opens your camera, or you can upload a video from your library, social media or wherever you want to bring it from, and we extract the motion. Then you can save it to your moves. You can give it a name, like “hello” or “excited”, and customise your own expressions and behaviours in the game. Once it’s saved, you can play it with your avatar whenever you want.

Where do you think AI and machine learning will have the most impact on games in the next few years?

It depends on what kind of value we’re talking about. Let’s simplify the question and focus on monetary value. For me, there are three buckets where AI can change the creation and gaming space.

The first bucket is accelerating studios’ workflows and making creation faster. That’s the obvious one. Let’s say 99% of the companies you see are working on one kind of asset: lip sync, voice, 3D assets, code. So that’s the first bucket – accelerating all that. For me, it’s the smallest of the three because it’s an incremental change.

The second bucket is where we are: it’s UGC, enabling people to do things they couldn’t do before with AI and implementing that in your game. It’s enabling people to create their own emotes, customise their own avatars, create their own virtual worlds and build things in games. That is very pivotal because we’re not talking about 20 or 30 million game developers using Unity or Unreal. We’re talking about 100 million or maybe even billions of players who could become participants and use AI as part of their journey. The leverage there is an order of magnitude greater; it’s like comparing the video editing tools of 20 years ago with TikTok. Now, everyone can create a video on their phone; everyone is participating in creation. Every game can be like Minecraft or even Roblox.

The third bucket, which could be even bigger than the other two, is AI-powered games. Every time new technologies arrive, new kinds of gameplay are created. Maybe there will be a new type of gameplay that comes with an AI agent or AI creativity. AI can be the core loop of the game. And games can generate tens of billions in revenue, so maybe the next Fortnite will have an AI game loop inside it.

With AI technology moving so rapidly, how do you ensure that your team stays at the forefront of innovation?

If AI is a tool, then you need to think about use cases, a business model and distribution. What we have been focusing on for the past two years is building that distribution, once we had good enough technology. I think our core value is generating 3D animation, which is very hard to disrupt because no model can just come along and create super cool, game-ready 3D animation.

In video and animation, maybe there will be a big jump in technology, but in 3D animation, I doubt that some new model will just come along because, as I said, the data is not there. Building that data will take a lot of time for other people in the market. I think we’re the ones who have raised the most, and we’re investing a lot in animation.

If you want to access players, you need to integrate with the games where the players are. Integrating with games is a long journey because it’s super difficult to work with big games to bring tools that fit their workflows and correctly answer all their questions about scalability, performance and moderation. It takes years to build these layers. I don’t think you can just say one day, “Okay, I’m going to integrate with Fortnite!” and make it happen. Games are well-guarded by their studios.

Maybe the biggest companies have studios doing the same as us, but that’s a different story. From a startup perspective, you can innovate, but then you need to take it to market. We’re creating partnerships and long-term collaborations.

You mentioned the infrastructure you’ve had to build because you’re supplying the emotes to the games. Running AI and machine learning models takes a huge amount of resources, too. Can you speak a little more about the challenge there?

The challenge is: how can we generate an animation and then stream it? Once it’s generated, it’s stored in the cloud, and you have to stream it at runtime, directly into the game, to people all around the world. That’s a big challenge.

Six people in our company have been working just on the back end for the past two years. It takes a lot of resources to maintain and serve that. But without it, you can’t tell a big game that you’re going to serve it. If you can’t guarantee the service, it’s very hard for a game to adopt this kind of technology. You need to prove to them that there is value for their users, and for the moment, it’s still the beginning.

“Bias and diversity is a very important topic for us. It’s at the core of our company’s values. But it also serves our business purpose because our users can be of any age and shape. We’re about self-expression. We need to enable people to express how they truly are.”

Yassine Tahi

Very few games are accepting AI technologies. Overdare is the first time users are able to create with AI in a game and use their 3D assets in that game. Proving you can bring that and make it easy to integrate is the big challenge, because game teams have tons of things to build, so they don’t have time.

We had to build all this so that we could say to the studios, “Look, it will take two or three days to integrate. You don’t have to worry about all the back-end infrastructure. You just put in this feature, your users will be able to use it, and we run the thing for you. For you, it’s a monetisation tool. You can sell it to your users. They can create with it. They can share it on social media. It will bring you new users. So it increases your monetisation and your user acquisition without you having to invest in the infrastructure for that.”

That’s our strategy: to say it’s all easy and quick, and we bring you new AI tools for your users. We’re very different from [others] in the market, and when we talk to studios, they think we’re going to sell them animation tools for their studio! And we’re like, “No, no, we don’t want to talk to your animation teams. We want to talk with your product teams. We want to talk with your game designers!”

You recently announced a $1 million fund initiative to accelerate AI-UGC in games. How do you envision this fund changing the landscape of user-generated content in games?

By incentivising game developers to integrate AI-driven UGC tools, we are paving the way for a more democratised gaming ecosystem in the coming years. Players will no longer be mere consumers; they will become active contributors to the evolution of game worlds. This shift will result in richer, more personalised gaming experiences and open up new avenues for community engagement and monetisation for game developers.

Can you share any insights on the criteria you’ll be using to select developers for the fund?

We’re looking for developers who not only have a clear vision for advancing the user-generated content ecosystem but also meet some key criteria that align with our goals. Ideally, candidates should have a 3D avatar system already in place and be at a stage where they are live, in open or closed beta, or soon to be.

We’re also focusing on projects that demonstrate a growing user base, particularly in terms of monthly active users (MAUs); we are talking about 50K+ MAUs with demonstrated growth. Additionally, we’re interested in developers who are already monetising in-game assets or are eager to start exploring monetisation avenues. By selecting teams that meet these criteria, we aim to support the creation of robust, scalable, and monetisable UGC ecosystems within games.

Further down the rabbit hole

Some useful news, views and links to keep you going until next time…

Electronic Arts reveals experimental Project Air at its Investor Day and states AI "is not merely a buzzword for us". CEO Andrew Wilson claims EA has more than 100 active AI projects.

Last week, the Global AI Summit (GAIN) took place in Riyadh, KSA, hosting the first International Artificial Intelligence Olympiad, where 25 countries competed to solve technical questions and problem-solving challenges. The winner was Brest Lenarčič from Slovenia.

“Practical AI” is one of 21 topic tracks at PG Connects Helsinki on 1-2 October. That’s just a few days away, but tickets are still available.

The new Charismatic consortium has secured £1.04 million in funding from Innovate UK. It aims to explore AI-driven storytelling opportunities for the TV and film industries, especially with under-represented creators in mind. The CEO of Charismatic.ai is Guy Gadney, and the consortium includes Channel 4, Falmouth University, Aardman Animations and more.