"We’re building real-time 3D TV shows that talk back"

Luc Schurgers, CTO of Replikant, on AI characters, 3D animation pipelines, and the future of real-time storytelling.

Hello again! Welcome back to AI Gamechangers, the newsletter from the makers of PocketGamer.biz, where we speak to creators reshaping the games industry through practical applications of AI. Every week, we bring you insights from the sharpest minds on the frontier of tech and creativity.

Today, we chat with Luc Schurgers, a creative technologist and co-founder of Replikant, a real-time 3D platform that enables users to generate animated AI characters and have conversations with them. We explore what it means to turn chatbots into interactive sitcoms, how to maintain ethical standards in an era of data scraping, and why Luc built a version of his software that even his dad could use.

This edition’s a little late because we visited London Tech Week and the AI Summit. It was a busy week! As always, scroll to the bottom of the page for your round-up of the latest links and updates – including the news that AI Gamechangers will soon be making an IRL appearance with an all-new AI Gamechangers Summit in Helsinki (8th October). Join us!

Luc Schurgers, Replikant

Meet Luc Schurgers, co-founder and CTO of Replikant, a platform that combines game engines, LLMs, and real-time rendering to bring interactive AI characters to life.

With a background in visual effects, viral advertising, and real-time graphics, Luc has spent years building tools that blur the line between animation and conversation.

Replikant’s tech can power everything from classroom co-teachers to interactive avatars in virtual streaming environments. In this conversation, he shares how the team is designing a more accessible future for AI-driven digital storytelling.

Top takeaways from this conversation:

Replikant enables real-time interactive storytelling using AI avatars that can talk, remember, and perform in group scenes, turning traditional chatbots into conversational sitcoms.

The platform supports multiple LLMs, voice systems, and custom characters, so users can personalise their experience (or build enterprise-grade AI tools on top).

Ethical production is a core value, with all generative elements editable by humans, and no use of scraped, unlicensed data.

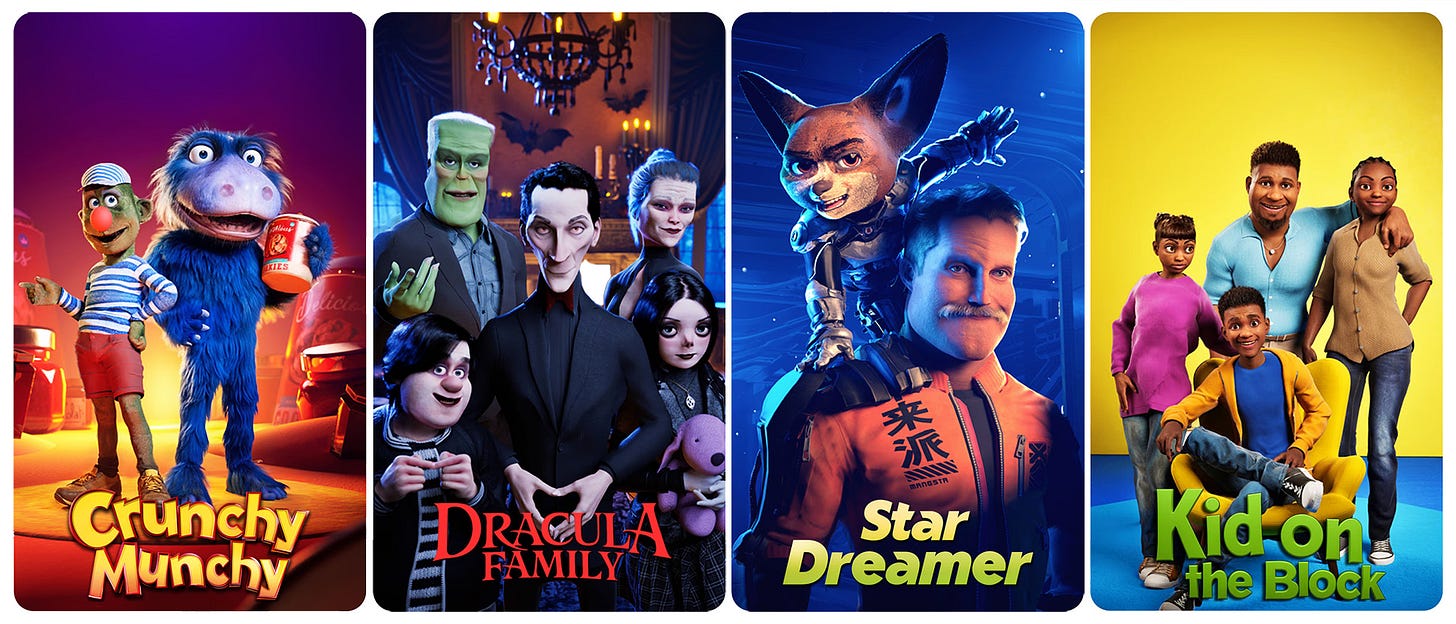

Applications range from education to entertainment, with school partnerships already live and a “Netflix for chatbots” experience coming soon to browsers.

AI Gamechangers: What’s your background, and how did Replikant come about?

Luc Schurgers: I have a diverse background. I studied multimedia and visual effects. I was really into early 3D animation. I ended up working in Soho [London], in the visual effects industry. In the early 2000s, I had a great time living in the UK. But when you dream about working in production in big visual-effects films, you don't imagine you're going to be this tiny little cog in a huge machine – so I didn't like that much! You’re so far down the chain of command.

I wanted to do something else, and I met with a couple of directors who advised me to make a music video. I began with a bunch of animated music videos. One thing led to another, I was signed by a production company, and I started making advertising commercials. I got a sponsorship to come out to the US, but the same thing happened – I was working with a big company. Then the 2008 recession happened and there wasn’t much work, so I started my first production company.

“We see it as a ‘Netflix for chatbots’: visual, interactive, and more fun. You’ll be able to load up your own AI character, have real conversations, and share those experiences with others”

Luc Schurgers

I've always had one foot in the tech world, and I've always enjoyed doing digital campaigns. I did a lot of out-of-home projection jobs and installations, and built full-on club activities for Heineken. I did a good 10 to 15 years of running around the globe doing all sorts of advertising campaigns. And then at some point, I realised I had developed so much software, it would be good to pack it all into a framework and make something.

However, it doesn't really work like that, you know?! If you want to make something serious, you have to go full-time and treat it differently. I had already been working with game engines. And for one project, we had made our own game engine, and I realised this is where the future of animation is. What I really like about animation, as a creative, is that you can think of anything. No world is too weird – the world is your oyster in terms of any crazy concept. What I hated about it was the pace of it, and always needing a huge team. You're locked into something and can’t be spontaneous; you can never see what happens “on set”. There’s no messing around. I figured I could make something that would change that.

It’s been about six years now that we’ve been working on this. We started out building automation tools, using machine learning to optimise workflows and make things easier for content creators. Even back when IBM Watson was still a thing, we were already integrating that kind of tech.

The platform we built is fully procedural, which is one of the coolest aspects. It means that whenever a new technology comes along, we can slot it in somewhere. So when LLMs really kicked off, we immediately thought, “This is going to be awesome!” and began implementing them, knowing very well that the first iterations would be too expensive for consumers. But we timed things quite well, especially with Replikant Chat. Now, you can have an amazing, affordable experience.

One thing we did struggle with hasn’t necessarily been on the AI side – it’s been about compute. We've always wanted to run in the browser. And when you're developing to a five-year roadmap, you have to try and predict the future, in a way. We expected more to shift toward cloud compute for real-time graphics, but that went in a different direction. AI compute isn’t naturally geared toward offloading real-time 3D, but that's now being solved as well. Within the next month, we'll have a running browser version.

What's the special magic that makes Replikant what it is?

The technical pitch is that we're a visualisation layer for basically any kind of data.

If you have, say, sales data, you can put our platform on top of it and interact with it in a much more engaging way. The characters are personalised, they have persistent memory, and they can trigger rich media like videos and screen shares. It’s a much more interesting way of communicating with LLMs than just using plain text or voice. And it’s really fast as well.

We also support up to five different characters at once. So it’s no longer really a chatbot – it becomes something like an interactive TV show or an interactive sitcom. We’ve found that using at least two characters, especially in educational settings, really helps keep the conversational flow going. It creates a whole new kind of experience.

“Anyone in their right mind wouldn’t have trained on copyrighted works. They’ve hoovered up the creative output and knowledge of humanity. We try to practice AI in an ethical way, and that's all you can do. It's a shame that bad actors have tarnished the name of technology”

Luc Schurgers

From a non-technical perspective, I’d say what we’re building is basically real-time 3D TV shows. We’ve rolled it out at a few schools now, and seeing how kids interact with it is fantastic. In fact, that’s where I got the idea of the “interactive TV show” from – they described it as “Cartoon Network, but they talk back!”

That’s Replikant Chat. And it’s built with Replikant Editor, which is kind of a branched-off version of it. With the Editor, you can build your own chat modules and customise every single part of the Replikant Chat experience.

We're using a custom engine based on Unreal 5.5, but it basically ships as its own editor, so it's not an OEM distribution of Unreal. It's a fully rebuilt editor that’s running all the time; it's simplified, faster and tailored. Unreal is a bit like a Swiss Army knife, so when a company implements it, they take a part and refine it, and we did that for real-time chat and animation (but keeping all the goodies of the Unreal rendering pipeline).

Please tell us about some of the partnerships you’ve got. You mentioned education, but are there entertainment clients too, and other business use cases?

We’ve always been quite consumer-focused first. That’s how we rolled it out. But of course, to make revenue, we also do enterprise work.

One really interesting project we’re working on is a revamp for a well-known brand. It’s kind of like a virtual video store. Think Netflix, but with an avatar in the middle that gives you a really personalised experience.

In education, we’ve partnered with a big school organisation in the US – they work with about 1,200 schools. They spent around six months testing the platform, and officially started in March, running two classes per week with it. The chatbot acts as a co-assistant alongside the teacher, and it’s working really well.

“Everything is about inference speed – that's important! It’s about finding the right balance. The best voice clone might not be the fastest. And that trade-off applies across everything”

Luc Schurgers

We’ve built a kind of RAG-style system, so teachers can drop in class materials and prep the chatbot ahead of time. That way, when they’re presenting the lesson, everything is smooth. It works in a group setting, but the great part is that students can revisit it individually later – all the information is still there. That kind of flexibility is showing a lot of promise.
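For readers unfamiliar with the term, "RAG-style" generally means retrieving the most relevant pieces of stored material and handing them to the model alongside the question. The sketch below illustrates that general idea only and is not Replikant's implementation. Every name in it (`retrieve`, `build_prompt`, `CLASS_MATERIALS`) is hypothetical, and the simple word-overlap scoring stands in for whatever retrieval method a production system would actually use.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, chunk: str) -> int:
    """Sum, over each query word, the smaller of its counts in query and chunk."""
    query_counts = Counter(tokenize(query))
    chunk_counts = Counter(tokenize(chunk))
    return sum(min(count, chunk_counts[word]) for word, count in query_counts.items())


def retrieve(query: str, materials: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k chunks that share at least one word with the query."""
    scored = [(overlap_score(query, chunk), chunk) for chunk in materials]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in relevant[:top_k]]


def build_prompt(question: str, materials: list[str]) -> str:
    """Combine the retrieved class notes and the question into one prompt."""
    context = retrieve(question, materials)
    context_block = "\n".join(f"- {chunk}" for chunk in context)
    return f"Use these class notes:\n{context_block}\n\nQuestion: {question}"


# Hypothetical class materials a teacher might drop in ahead of a lesson.
CLASS_MATERIALS = [
    "The Roman Empire fell in 476 AD.",
    "Photosynthesis turns sunlight into chemical energy in plants.",
    "Volcanoes form where magma reaches the surface.",
]

if __name__ == "__main__":
    print(build_prompt("How do plants use sunlight?", CLASS_MATERIALS))
```

Because the materials are stored rather than baked into a single session, the same lookup can serve a whole class during the lesson and an individual student afterwards.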

We’re also building out more classroom-style modules, including digital literacy for kids and safety education. That last one’s particularly interesting because we’re working with a few major platforms that haven’t always had the best reputation on the safety front. It’s nice to bring some positive change there.

And beyond that, there are all sorts of personal applications. For example, I’m building a chatbot for my own kid that knows everything about RC cars. There are so many little components to figure out. You can imagine similar use cases for travel, hobbies, all kinds of things.

We’re also experimenting with some really cool integrations, like combining Spotify and Alexa. We’re working with Amazon to properly integrate with the Alexa pipeline, and also with their cloud team to get everything deployable in the cloud. That’s still in alpha, but it’s going to look pretty cool.

Right now, you can actually try Replikant yourself – it’s already available to download on Steam and Epic. But we’re aiming for a proper v.1 release sometime mid-summer.

Are you using a particular foundation model right now? We assume Replikant can work with any of them, but what’s currently powering it?

We implement all of them. Because we have a procedural approach to everything we do, that flexibility extends to data ingestion, too. We can easily switch between models or even assign different ones to different characters.
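One way to picture assigning different models to different characters is a lookup from each character to a named backend. This is a minimal sketch under stated assumptions, not Replikant's code: the `Character` class, the `BACKENDS` registry and the backend names are all invented for illustration, and the backends are stubs that return canned text rather than calling any real model API.

```python
from dataclasses import dataclass
from typing import Callable

# A backend is anything that turns a prompt into a reply.
Backend = Callable[[str], str]


def make_stub_backend(label: str) -> Backend:
    """Build a placeholder backend; a real one would call a model provider."""
    def reply(prompt: str) -> str:
        return f"[{label}] reply to: {prompt}"
    return reply


# Hypothetical registry of available models, keyed by name.
BACKENDS: dict[str, Backend] = {
    "model-a": make_stub_backend("model-a"),
    "model-b": make_stub_backend("model-b"),
}


@dataclass
class Character:
    name: str
    backend: str  # key into BACKENDS; can be changed at any time

    def say(self, prompt: str) -> str:
        """Route the prompt to whichever backend this character uses."""
        return f"{self.name}: {BACKENDS[self.backend](prompt)}"


def run_scene(characters: list[Character], prompt: str) -> list[str]:
    """Ask every character in the scene the same question, in order."""
    return [character.say(prompt) for character in characters]


if __name__ == "__main__":
    cast = [Character("Ada", "model-a"), Character("Ben", "model-b")]
    for line in run_scene(cast, "Why should we hire you as history teacher?"):
        print(line)
```

Switching a character to another model is then a one-field change (`cast[0].backend = "model-b"`), which is the kind of flexibility described above.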

I did a really fun test. It’s up on my YouTube, where I had OpenAI and DeepSeek both being interviewed for the role of history teacher at a hypothetical school. It was interesting to see how the models interacted. Sometimes they complemented each other, and other times they’d try to shut one another down. You can put everything in there, and it all works.

The same goes for voices. We support Microsoft, Whisper, Murf, Cartesia, ElevenLabs, Google – basically, on the editor side, you get a drop-down menu and can pick whichever service you want. Behind the scenes, we’ve also built an in-between dashboard that monitors and converts credit usage across providers.
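Converting usage across providers into one unit could look something like the sketch below. It is purely illustrative: the provider names, the rates in `CREDITS_PER_1K_CHARS` and the idea of billing per 1,000 characters are all assumptions, not real pricing or Replikant's actual dashboard logic.

```python
from collections import defaultdict

# Hypothetical internal credit cost per 1,000 synthesised characters.
CREDITS_PER_1K_CHARS: dict[str, int] = {
    "voice-a": 10,
    "voice-b": 25,
}


def to_credits(provider: str, characters: int) -> int:
    """Convert a character count into internal credits, rounding up."""
    rate = CREDITS_PER_1K_CHARS[provider]
    return (characters * rate + 999) // 1000


def summarise(usage: list[tuple[str, int]]) -> dict[str, int]:
    """Total the characters per provider, then convert each total to credits."""
    characters_by_provider: dict[str, int] = defaultdict(int)
    for provider, characters in usage:
        characters_by_provider[provider] += characters
    return {
        provider: to_credits(provider, total)
        for provider, total in characters_by_provider.items()
    }


if __name__ == "__main__":
    # Each entry is (provider, characters spoken) for one line of dialogue.
    usage_log = [("voice-a", 1500), ("voice-b", 400), ("voice-a", 500)]
    report = summarise(usage_log)
    print(report, "total:", sum(report.values()))
```

Keeping a single internal unit means a user can pick any voice service from the menu without needing to understand each provider's own billing scheme.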

You’re not just processing speech and text. You’ve got animation and lip sync in the mix too. What technical challenges did you face there?

Everything is about inference speed – that's really important! It’s about finding the right balance. The best voice clone might not be the fastest.

And that trade-off applies across everything. Take images, for example. We use a bunch of open-source models, but we often end up falling back to Flux Fast, just because it delivers the speed we need. We use that for textures, images, anything where we need that kind of instant result.

“We’ve partnered with a big school organisation in the US. The chatbot acts as a co-assistant alongside the teacher. The great part is that students can revisit it individually later – all the information is still there. That kind of flexibility is showing a lot of promise”

Luc Schurgers

Lip syncing actually happens locally, so it’s not that much of a slowdown, but that said, it could be better; it's something we can improve on. But again, it comes down to priorities: where do we spend our development hours? For example, Epic is working on some really promising lip sync tech, and it looks great – but it’s not real-time yet. So we're constantly balancing and seeing where to spend our time.

Let’s talk about ethics. A lot of people see the benefits of AI, but there’s also concern – about copyright, for instance, and job losses. You’re right in the middle of all this. What’s your stance on the ethical use of AI, and how do you respond when companies raise those concerns?

To be honest, I find it flabbergasting to witness the amount of theft firsthand. When you’re developing in this space, you see it clearly. These models get built, then fenced off, so you can’t outright [copy] – but with some clever prompting, [people] can still get around it.

We all come from games, visual effects, animation: we respect the craft. So in Replikant, everything is either licensed or homemade. That’s been our approach from the start. We focus on production, and we want this to be safe for production.

And we’re not trying to automate everything away. Personally, I’ve tried prompting, and yeah, the first few times it’s great because you get this instant feedback, but you’re not really making something. So, in the editor and our animation pipeline, what we provide is a way to speed things up, not replace the creative process.

We have a rule: whenever generative AI is used – whether it’s to generate a script, analyse a scene, pick a camera angle, animate a moment, or write a line of dialogue – every single one of those actions must be editable. You have to be able to go in and change it manually. We feel like the craft is important.

I don’t think it’s good or fair that people’s work has just been scraped and digitised and put out all over the world for anyone to use. And it’s a real shame that this behaviour has become synonymous with AI use. The tech itself is great. But it’s the Silicon Valley mentality – this obsession with getting there first, then defending your position with legal teams – that I don’t like.

Anyone in their right mind wouldn’t have trained on copyrighted works. It’s ridiculous. And now they’re saying we should just abandon IP law altogether? I think that’s disgusting, honestly. They’ve hoovered up the creative output and knowledge of humanity.

“The characters are personalised, they have persistent memory, and they can trigger rich media like videos and screen shares. It’s a much more interesting way of communicating with LLMs than just using plain text or voice”

Luc Schurgers

So yes, we try to practice AI in an ethical way, and that's all you can do, really. Hopefully, it will get sorted at some point. But it's a shame that bad actors have tarnished the name of technology.

Tell us a bit more about your roadmap. What’s your vision for Replikant’s future?

Right now, the biggest focus is the browser rollout – getting Replikant Chat running smoothly across platforms. That’s the core of what we’re working on.

In the background, we’re also building out easy chatbot customisation. Within Replikant Chat, it’ll be super easy for anyone to create their own bot, with their own character, their own data, even their own voice if they want.

I'm really taking my dad as an example here. He’s a 75-year-old birdwatcher living in the Netherlands, and I’m designing this so that if he can do it, then a lot of people can. That’s the goal: make powerful tools accessible to everyone.

Simultaneously, we’re keeping Replikant Editor compatible with Replikant Chat. So, for people who want to go deeper (the enterprise crowd, people who want full access to models and advanced tools), they can still do all that in the Editor.

We see it basically as a “Netflix for chatbots”: visual, interactive, and more fun. You’ll be able to load up your own AI character, have real conversations, and share those experiences with others. And of course, we’ll moderate it.

All of this is coming together soon: we’re talking about the next three months. After a long time, we’re finally getting close to launch.

Further down the rabbit hole

Some useful news, views and links to keep you going until next time:

Mattel announced a partnership with OpenAI to bring AI into its toy brands (including Barbie and Hot Wheels). It’ll bring AI-powered experiences to both physical products and digital content later this year. It marks OpenAI’s first major move into the toy and family entertainment sector.

Ex-Netflix VP Mike Verdu and Zynga founder Mark Pincus have formed a startup called Playful.AI in San Francisco to “reinvent game development and game experiences with AI”.

The Anbernic RG557 retro gaming console received a significant update, which bolts in a bunch of AI tools. There’s a chatbot to help answer game questions and a translation service.

The SAG-AFTRA video game strike was suspended last Wednesday, and on Thursday the union approved the tentative agreement with the video game bargaining group on terms for the Interactive Media Agreement. The contract is now with members for ratification.

Stefano Corazza, who we interviewed about the use of AI to aid UGC creation, has gone and quit Roblox.

The team at mobile gaming giant Scopely has been having fun with AI music.

Unveiled last week: GRID turns esports data into real-time storytelling with an AI-powered broadcast layer.

Market intelligence startup Creativ has used LLMs to analyse over 1.5 million Reddit, YouTube, Discord and news conversations about the world’s top 17 game publishers.

AI Gamechangers readers: come and join us as we host speakers and panellists live on stage! On Wednesday, 8th October, at Wanha Satama in Helsinki, Finland, the first AI Gamechangers Summit will take place in conjunction with PG Connects Helsinki. Tickets are available, and we’re open to speaker submissions.