"We should focus on how efficiencies can improve the games being developed"

Ken Noland of AI Guys reveals the lessons learned from attempting to rebuild XCOM using generative AI tools.

Hello and welcome to this week’s edition of AI Gamechangers, your regular peek behind the curtain of AI innovation in games.

Each week, we speak with developers, founders, and technologists who are putting artificial intelligence to work in practical, often surprising ways.

This time, we’re joined by Ken Noland. He’s a veteran programmer and AI realist who’s spent the past few years helping studios integrate AI in meaningful, grounded ways, from building classical NPC behaviours to experimenting with LLM-driven development pipelines. In this conversation, he walks us through what happened when he rebuilt classic strategy game XCOM with AI tools, where current models fall short, and how his team separates actual value from the hype.

As always, scroll to the very end for a round-up of news and links, including AI Darth Vader in Fortnite, Elton John falling out with the UK government, hallucinations, blackmail, Supercell, investments, events and more.

Ken Noland, AI Guys

Meet Ken Noland, co-founder, CEO and CTO of AI Guys, a team that helps games companies implement both classical and generative AI. With a 25-year career spanning audio, networking, and now AI systems, Ken brings a pragmatic lens to today’s tech trends.

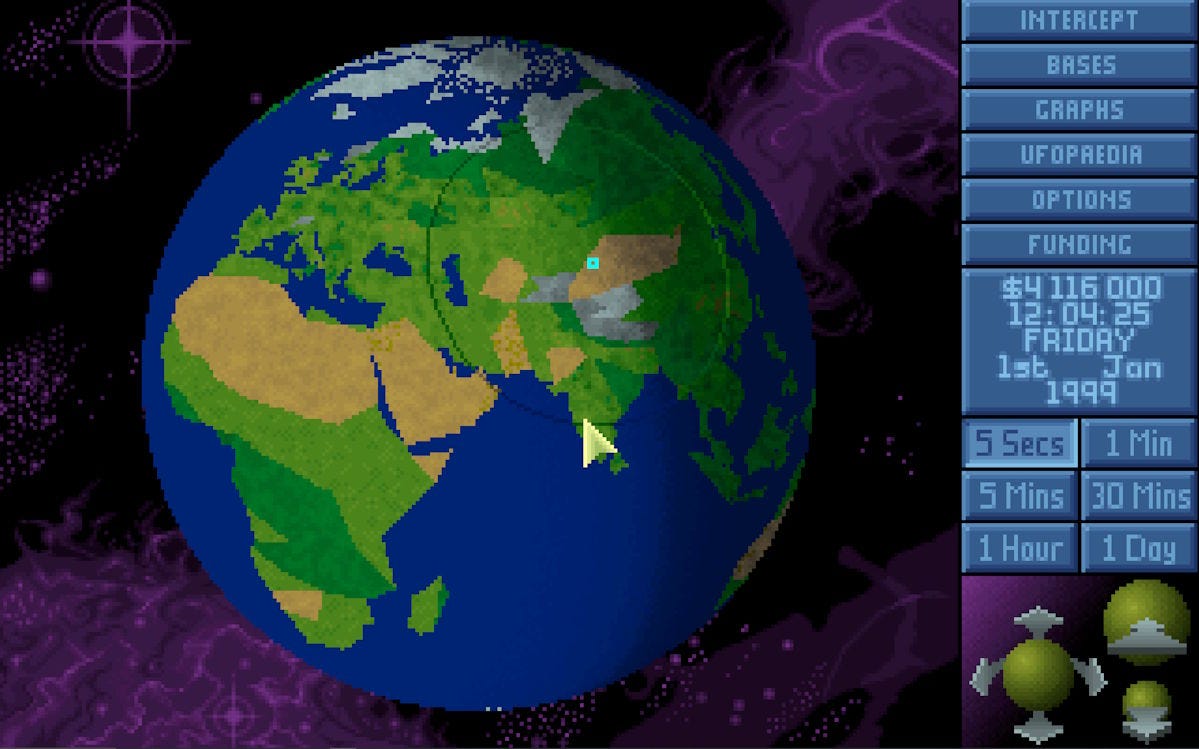

At the New Game Plus event, part of last month’s London Games Festival, Ken talked about a pet project of his: rebuilding the classic XCOM game experience using generative tools. We grabbed him for more details on this and to dive deeper into the opportunities and challenges of AI for developers. He talks about the practical limits of today’s LLMs and outlines how studios can harness AI safely, ethically, and efficiently, without falling for the hype.

Top takeaways from this conversation:

As a case study, Ken spent three months rebuilding the engine of the classic XCOM game with AI tools. The project revealed that while AI excels at generating boilerplate code, it struggles with obscure technical issues that human experts can solve in seconds.

DeepSeek emerged as the standout local model during development, matching the quality of ChatGPT and Claude while running on-premises, a crucial advantage for studios with strict IP protection requirements.

Many game studios are using AI for process improvements (generating storyboards, automating code reviews, and creating production reports) while avoiding AI-generated content in final products.

The economics of AI-powered gameplay remain challenging, as inference costs for conversational NPCs can be prohibitive unless studios run models locally – something that may well become feasible as consumer hardware improves.

AI Gamechangers: To break the ice, please give us a sense of your personal background. How did you get where you are today?

Ken Noland: I’ve been in the games industry for about 25 years now. I started out as a junior, thinking I knew everything, and was very quickly humbled by the depth and complexity involved in the game development of the late ’90s and early 2000s. I entered with a large ego, and my lead at the time took pride in the fact that he put me in my place very quickly!

I decided to specialise in audio for a bit, and I developed a couple of unique audio systems for various little video games. Then I moved over to Europe and switched my speciality again, this time focusing on networking. I left audio because FMOD was taking off, and I saw the writing on the wall. Nobody needed a bespoke audio system when they could just download FMOD. But networking was different because there is no such thing as a one-size-fits-all networking solution for every game. I did network programming for 15 years or so, working with all levels of integration, from low-level UDP all the way up to high-level matchmakers, and built a lot of different systems that were shipped with games.

A few years ago, I decided to refocus my attention on AI. It’s always been something that’s grabbed my attention. This goes back eight years or so: I tried building an online trading bot that did sentiment analysis on individual stocks based on news reports. It failed horribly. But through that experience, I started to get more keen on the AI landscape. Back then, it was all TensorFlow and deep learning models and stuff like that – no generative AI yet. I became more and more engaged in that kind of AI analysis.

When word2vec (which was kind of a foundation for a lot of the large language models) hit the scene, I thought it was a cool little toy. I didn’t have the vision to see what something like ChatGPT would become. But it was one of those things I knew a lot about, and I could bring my experience to bear. So I switched my specialities to focus on AI directly. Since then, I’ve been building and fine-tuning various models, creating agents and trying to find out where the limits of the technology lie.

What is the elevator pitch for your current business, AI Guys?

AI Guys is a co-development studio. We are primarily “boots on the ground” for game studios who want to integrate AI into their game, or into their process flow, or whatever their needs are.

We address those needs by dividing them into two lanes: the generative side and the classical side. There’s a bit of a grey area in between when it comes to procedural generation. But we handle both the classical AI (that’s your typical state machines, goal-oriented action planning, behaviour trees and that kind of stuff) and everything on the generative side as well, which includes pre-trained models. We deploy bespoke solutions for individual clients.
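For readers less familiar with the classical side, here is a behaviour tree in miniature, one of the techniques Ken lists. It is a hypothetical Python illustration, not AI Guys’ code: the node types (`Action`, `Sequence`, `Selector`), the blackboard keys and the soldier behaviours are all invented for the example, and the "running" status that production trees use for multi-frame actions is left out for brevity.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"


@dataclass
class Action:
    """Leaf node: runs a callback against the shared blackboard dict."""
    name: str
    run: Callable[[dict], bool]

    def tick(self, blackboard: dict) -> Status:
        return Status.SUCCESS if self.run(blackboard) else Status.FAILURE


@dataclass
class Sequence:
    """Succeeds only if every child succeeds; stops at the first failure."""
    children: List

    def tick(self, blackboard: dict) -> Status:
        for child in self.children:
            if child.tick(blackboard) is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


@dataclass
class Selector:
    """Tries children in priority order; stops at the first success."""
    children: List

    def tick(self, blackboard: dict) -> Status:
        for child in self.children:
            if child.tick(blackboard) is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE


def condition(key: str) -> Action:
    # Hypothetical check: succeeds if the blackboard flag is set.
    return Action(key, lambda bb: bool(bb.get(key, False)))


def act(name: str) -> Action:
    # Hypothetical behaviour: records the chosen action and always succeeds.
    def run(bb: dict) -> bool:
        bb.setdefault("log", []).append(name)
        return True
    return Action(name, run)


# A hypothetical soldier: shoot if possible, otherwise take cover, otherwise patrol.
soldier = Selector([
    Sequence([condition("enemy_visible"), condition("has_ammo"), act("shoot")]),
    Sequence([condition("enemy_visible"), act("take_cover")]),
    act("patrol"),
])

state = {"enemy_visible": True, "has_ammo": False}
soldier.tick(state)
print(state["log"])  # ['take_cover']
```

Reordering the selector’s children changes the soldier’s priorities without touching any leaf code, which is a large part of why behaviour trees are popular for game NPCs.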

“Something we have proved is that we can take older games and give them a new life. We can take existing teams and develop new pipelines to make them more efficient”

Ken Noland

The benefit is that we’re not bound by any particular model. We’re not trying to be snake oil salesmen. We’re realistic about what AI can and cannot do. At the end of the day, we have to deliver whatever solutions we sign up for! That’s our key strength: we’re not bound by trying to appease an investor. We’re not trying to over-promise. We’re trying to be realistic. AI does have real benefits in the game development space, and we can separate ourselves from the hype and focus on delivering them.

At the New Game Plus event during the London Games Festival, you spoke about using AI tech to rebuild the original XCOM game and the practical lessons you learned from that. What was the genesis of that project?

XCOM was one of those games that always fascinated me. The new Firaxis ones are great, but the classic XCOM has a special place for me because I was going to college at the time, and it was the main game I was playing religiously until four o’clock in the morning! I have a lot of positive memories about it.

So, despite the great modern games from Firaxis, I wanted to see if I could still play the old XCOM, and I found that I could with an emulator. But there was also another project that was opening up, called OpenXcom, where [a team] was re-implementing the engine for XCOM. I thought I could potentially help out. I got involved in that project – not very much initially, just a handful of fixes and memory tweaks, but then I became wrapped up in paid work, so I couldn’t continue development on it.

“I don’t foresee a future in which people are excluded from the development loop. The best studios are the ones that use efficiency gains to improve the product rather than cutting people in order to save money”

Ken Noland

Also, I was told by the people running the project that under no circumstances were they going to introduce Lua as a scripting language, and that was one of my big goals. I wanted to have Lua as a scripting language within XCOM. But their main goal was to re-implement all the behaviours and everything exactly as it was in the original game, and they felt that adding Lua would change the game too much. The way they had architected the engine meant that Lua could never really be implemented without a major reshuffle.

I left the project, but was thinking, “Someday I’ll get back to it.” When we decided to do AI Guys, we considered the major questions being asked around AI in the games industry. And the big question at the time was, “What can AI really do for game development?” There were no case studies. Everybody was talking in generalisms. People were saying, “It’s going to 10x your engineers”. There was so much hype around it, and we wanted to know what AI could do with a definitive example. Could we demonstrate that it’s really good at handling some stuff, and not so good at handling other stuff?

I didn’t have any way of showing it unless I had an actual project. So I reached back into my memory and thought, “Well, I always wanted to implement Lua in XCOM! How about we redo the entire engine using generative AI? We’ll sandbox it for three months; we will focus just on improving the development experience; we will use every single LLM, every single tool that we can, and show what can be done, then use this as a case study to publish to the world. No holds barred: let’s try to rebuild an entire game engine in three months.”

What was the most surprising thing this project showed you? Did the AI function exactly as you expected and help you achieve what you hoped?

There are a couple of caveats here.

One, I’m not a graphics programmer by trade. In my entire history, I never once did anything with graphics aside from getting a couple of triangles on screen.

In order to implement the new renderer for OpenXcom, I had to use a graphics API called Vulkan. Vulkan is a very close-to-the-metal API. It’s very temperamental, but very precise. There was one particular issue where I was rendering a line, and then I asked it to render a box outline. I looked at the result, and there was a pixel missing in the lower right-hand corner!

I went through my code and did everything I could to figure out why that single pixel was missing. I asked every LLM out there (ChatGPT, Claude, Gemini): I’d give them the code snippets, explain the problem and share the image. I walked through it with them and did everything to work out why this one pixel was not there. On paper, the numbers all made sense.

Finally, I gave up. Part of the rules of this engagement was that we weren’t supposed to speak to any human consultant. But in this particular case, after a week and a half, I caved on that rule. I went to a senior graphics engineer, and he immediately found the issue. He looked at it and said, “Oh yeah. You need to flip this bit and add these things, and then you’re done.” It took him maybe 10 seconds, and he was immediately able to tell me where the issue was.

I looked it up online, and the main reference to that particular issue happened to be in the Vulkan specification, a 5,000-page document released by the Khronos Group. The issue and how to resolve it were described halfway through that document. No single engineer would be expected to sit down and read it line by line; it’s meant to be a reference you go to when you encounter certain issues. LLMs continue to have a hard time finding these little gems. What surprised me was both where that information was located and how obscure the issue was. None of the LLMs could resolve it.
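Ken doesn’t say which setting the graphics engineer changed, so what follows is only an illustration of one well-known way a box outline can lose exactly one corner, not a reconstruction of his bug. Many line rasterizers deliberately leave out a segment’s final pixel so that connected line strips don’t draw shared vertices twice (OpenGL’s "diamond-exit" rule typically has this effect). If a box is drawn as four independent segments that all run left to right or top to bottom, the lower-right corner is only ever an end point, so it is never drawn. The sketch uses a textbook Bresenham line with a hypothetical `include_last` flag.

```python
def bresenham(x0, y0, x1, y1, include_last=False):
    """Pixels on the line from (x0, y0) to (x1, y1), all octants.

    With include_last=False the end point is omitted (a half-open segment),
    mimicking conventions that avoid double-drawing shared vertices.
    """
    pixels = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    x, y = x0, y0
    while True:
        if (x, y) == (x1, y1):
            if include_last:
                pixels.append((x, y))
            break
        pixels.append((x, y))
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x += sx
        if e2 <= dx:
            err += dx
            y += sy
    return pixels


def box_outline(w, h, include_last=False):
    # Four independent segments (y grows downwards), each drawn from its
    # top or left end, so (w, h) is an end point of two segments and a
    # start point of none.
    segments = [
        ((0, 0), (w, 0)),  # top, left to right
        ((0, 0), (0, h)),  # left, top to bottom
        ((w, 0), (w, h)),  # right, top to bottom
        ((0, h), (w, h)),  # bottom, left to right
    ]
    pixels = set()
    for (xa, ya), (xb, yb) in segments:
        pixels.update(bresenham(xa, ya, xb, yb, include_last))
    return pixels


corners = {(0, 0), (7, 0), (0, 4), (7, 4)}
half_open = box_outline(7, 4)
print(sorted(corners - half_open))                               # [(7, 4)]
print(sorted(corners - box_outline(7, 4, include_last=True)))    # []
```

Every pixel on the box looks "right by the numbers" except the one corner no segment starts from, which is why this class of bug is so hard to spot from the code alone.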

I’ll give you a second example: the font system. Once again, I’m not a graphics engineer, nor a localisation engineer. Some writing systems in the world have different rules to English. Arabic is one and Hebrew another: the shape a letter takes can change depending on where it falls in a word and which letters surround it.

I went down the rabbit hole of localising everything, trying to make it as localised as possible to allow people to convert the game to their language. I developed my own custom font system for this engine, and because it was honestly just a fun little puzzle, I ended up spending way more time than I had originally allocated for just this simple font rendering system. That was one of those situations where it was just so much fun, I kind of forgot that the end goal was to build the whole game!
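To make Ken’s point about Arabic concrete: each Arabic letter is stored as one Unicode character, and the renderer picks an isolated, initial, medial or final glyph depending on whether the letter joins its neighbours. This toy Python sketch classifies positions using a hand-picked subset of Unicode’s joining types ("D" for letters that connect on both sides, "R" for letters such as alef that connect only to the letter before them). It is a deliberate simplification: real shaping engines such as HarfBuzz also handle diacritics, mandatory ligatures like lam-alef and right-to-left layout, none of which appear here.

```python
# Illustrative subset of Unicode Arabic joining types (not complete).
# "D" = dual-joining, "R" = right-joining (joins only the preceding letter).
# Anything missing, including spaces, is treated as non-joining ("U").
JOINING = {
    "\u0627": "R",  # alef
    "\u062F": "R",  # dal
    "\u0648": "R",  # waw
    "\u0628": "D",  # beh
    "\u062A": "D",  # teh
    "\u0643": "D",  # kaf
    "\u0645": "D",  # meem
}


def contextual_forms(word):
    """Positional form of each letter, in logical (storage) order."""
    forms = []
    for i, ch in enumerate(word):
        this = JOINING.get(ch, "U")
        prev = JOINING.get(word[i - 1], "U") if i > 0 else "U"
        nxt = JOINING.get(word[i + 1], "U") if i + 1 < len(word) else "U"
        # A join needs the earlier letter to connect forwards ("D") and the
        # later letter to connect backwards ("D" or "R").
        joins_prev = prev == "D" and this in ("D", "R")
        joins_next = this == "D" and nxt in ("D", "R")
        if joins_prev and joins_next:
            forms.append("medial")
        elif joins_prev:
            forms.append("final")
        elif joins_next:
            forms.append("initial")
        else:
            forms.append("isolated")
    return forms


print(contextual_forms("\u0643\u062A\u0628"))  # ['initial', 'medial', 'final']
print(contextual_forms("\u0628\u0627\u0628"))  # ['initial', 'final', 'isolated']
```

The second word shows the subtlety Ken ran into: because alef never joins the following letter, the last beh ends up in its isolated form even though it sits at the end of a word.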

Were there any discoveries about which model was best? Did you find yourself using them all equally, or was one particularly good for the type of coding you needed?

The release of DeepSeek occurred about halfway through the project. Before DeepSeek was really grabbing headlines, I had already taken the model and was playing around with it. It’s one of those models that I could run locally and still match Claude’s or ChatGPT’s output. Running locally means I could deploy it in a game studio with hard restrictions on letting its IP leave the building. That was an important aspect of what we were trying to discover: the strength of local models.

“The big foundational models are able to handle basic tasks easily. It’s when you start getting into complicated things that you still need domain expertise, people who are familiar with the graphics APIs, the networking layer, and audio”

Ken Noland

So I kept going back to DeepSeek over and over again, because it was by far the best local model that I could run on my hardware. I was trying everything: Gemini and Gemma from Google; Facebook’s model, Llama. I tried every single one. But none of the local models came close to the kind of quality that I was getting out of DeepSeek.

Now, granted, at that point, I was already training DeepSeek on the codebase itself. I was building retrieval-augmented generation steps in order to interface with GitHub and get the latest pull requests and stuff like that. So I was building tools around DeepSeek that just blew everything else out of the water.
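Ken doesn’t detail his tooling, so this is a generic sketch of the retrieval step in retrieval-augmented generation, under stated assumptions: pull-request descriptions (which could be fetched with GitHub’s REST endpoint `GET /repos/{owner}/{repo}/pulls`) are ranked against a developer’s question, and the best matches are pasted into the prompt for a local model. To stay self-contained, it uses bag-of-words cosine similarity where a real pipeline would use an embedding model, and the pull requests are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9_]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: List[Dict], k: int = 2) -> List[Dict]:
    """The k documents most similar to the query (stand-in for embeddings)."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d["text"]))),
                    reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: List[Dict], k: int = 2) -> str:
    """Prepend the retrieved pull requests as context for the model."""
    context = "\n".join(f"[PR #{d['number']}] {d['text']}"
                        for d in retrieve(query, docs, k))
    return f"Context from recent pull requests:\n{context}\n\nQuestion: {query}"


# Hypothetical pull requests, as they might look after fetching and trimming.
PULLS = [
    {"number": 101, "text": "Add Lua bindings for unit scripting"},
    {"number": 102, "text": "Fix Vulkan line rendering in the battlescape renderer"},
    {"number": 103, "text": "Refactor save game serialization"},
]
QUESTION = "How are Lua scripting bindings exposed for units?"
print(build_prompt(QUESTION, PULLS, k=1))
```

The resulting prompt string would then go to whichever local model the studio runs; keeping retrieval separate from the model is what lets the same pipeline sit in front of DeepSeek or anything else.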

If I’d done that with ChatGPT or Claude, I’d have had to pay for those tokens to be generated, whereas this was just running locally on my box. I’d let it run overnight, and in the morning, I’d have some potentially good results (sometimes not!). DeepSeek was a model that came out of nowhere and surprised me.

We try to run models locally as often as possible because that’s a requirement for a lot of game studios. They want to protect their IP and make sure that things aren’t slipping into the public domain. Places have strict policies around what can and cannot leave the premises. So running something like DeepSeek as your local co-pilot is entirely feasible within organisations where IP is critical.

It was all part of the exercise to find out what we can and cannot do with generative AI. I think we did a pretty good job. Initially, we had some other engineers on the project, too, but along the way, they got snatched up by real contracts. We had to pay the bills! By the end, it was just me working on this.

What misconceptions do you think developers have about what AI can and can’t do? Some people expect AI to work like magic. “I’m going to push a button, and it’ll make a game for me.” Then there are sceptics who believe it will never be able to code as well as a human. What are you hearing back from game developers?

We’re right in the middle of that argument. When it comes to the perception that games are going to be auto-generated by AI, we’re way off from being able to do that! There’s a lot of stuff that has to be addressed before we can ever come close to that.

To address the other side of the fence, that AI code is worthless, and you should never use it, I would point out the efficiency you get from not having to re-implement the same thing over and over again. You can say, “Given this framework, I need a new window system.” And because there are hundreds of examples of how to do that, it’s able to generate the skeleton for you, and you can then bring it into your code base and tailor it to your needs. That removes the necessity to look up the documentation behind each one of those function calls. It removes the necessity to sit there and go over each individual function. Even just the act of typing is reduced to auto-complete, essentially.

“We try to run models locally as often as possible because that’s a requirement for a lot of game studios. Places have strict policies around what can and cannot leave the premises”

Ken Noland

Most of the big foundational models are able to handle fairly basic tasks really easily. It’s when you start getting into complicated things, like rendering that single missing pixel, that they lose their focus. So you still need domain expertise, people who are familiar with the graphics APIs, and people who are familiar with the networking layer. You need people who understand audio. You still need all these things. You need expertise either in-house or through consultancy to fill the gaps that AI cannot do at this point.

But you don’t need to waste time building another log system or another time system. Those are things that AI can handle rather gracefully.
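A "time system" of the kind Ken mentions is a good example of well-trodden boilerplate. One common pattern, sketched here in Python as an illustration rather than anything from the XCOM rebuild, is a fixed-timestep clock: real frame time is accumulated, the simulation advances in fixed steps, and the leftover fraction is returned so the renderer can interpolate. The class name and default values are assumptions.

```python
class GameClock:
    """Fixed-timestep clock (hypothetical example of engine boilerplate)."""

    def __init__(self, step=1 / 60, max_frame=0.25):
        self.step = step            # simulation step, in seconds
        self.max_frame = max_frame  # clamp long frames to avoid a catch-up spiral
        self.accumulator = 0.0
        self.sim_time = 0.0

    def advance(self, frame_dt):
        """Feed real elapsed time; return (steps to simulate, interpolation alpha)."""
        self.accumulator += min(frame_dt, self.max_frame)
        steps = 0
        while self.accumulator >= self.step:
            self.accumulator -= self.step
            self.sim_time += self.step
            steps += 1
        return steps, self.accumulator / self.step


clock = GameClock(step=1 / 64)
print(clock.advance(0.0625))  # (4, 0.0)
```

Code like this has countless public examples, which is exactly why an LLM can produce a serviceable version quickly.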

You can argue over whether or not there is an ethical consideration to be concerned about. What the AI is doing is taking all the information from online, aggregating it, compressing it into the model’s weights, and then building its inference from there. And we, as a general rule, are very much on the copyright holder’s side! We want to make sure that the artists, developers and other people whose works are being used to train these large language models get the credit. But right now, there’s no easy, legal way of doing that.

On the subject of ethics, people are also concerned about the environmental impact of AI. How do you reconcile that with AI’s usefulness, and how do you advise people?

The environmental impact of AI is an interesting topic. To talk about it, you have to think about the development chain of these chips, these solar plants, these nuclear plants that are being developed in order to power the infrastructure needed. We’re tearing apart massive mines, building huge factories, outputting tons of heat by-products.

But there are also environmental impacts of buying a car. People would say, “Tesla’s the environmental choice!” But then you find out that the lithium for the batteries is strip-mined, destroying natural habitats to create what we thought at the time was a more environmentally friendly automobile.

Right now, AI is massively expensive in terms of computational power. But it’s only going to get cheaper. DeepSeek proved that there’s a lot of optimisation that can be done under the hood for these large language models.

There’s another leader in the AI space who pointed out that these large language models are only about 10% efficient. The other 90% is tokens that are wasted, excluded as part of the search results. As we develop better and smarter AIs, we’ll start to claw those efficiencies back. That will have knock-on effects, such as reducing the power consumption of AI and the hardware requirements for running the models. As such, I foresee it getting cheap enough that power consumption is no longer an issue.

At the moment, that’s not the case. But with more research (especially techniques like selective node activation, which DeepSeek and Gemini are moving towards), we will see better power efficiency and a smaller environmental impact.
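We read "selective node activation" as referring to sparse mixture-of-experts (MoE) models, in which a small router picks a few "expert" sub-networks for each token and the rest stay idle; DeepSeek’s V3-generation models use this design. This Python sketch is a toy top-k router over tiny hypothetical experts, meant only to show why compute scales with the number of experts activated rather than the number that exist.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def top_k_moe(x: Vector, experts: Sequence[Callable[[Vector], Vector]],
              router: Sequence[Vector], k: int = 2) -> Tuple[Vector, List[int]]:
    """Sparse mixture-of-experts forward pass for one input vector.

    Only the k highest-scoring experts are evaluated; their outputs are mixed
    using a softmax over the selected router scores.
    """
    # Router: one score per expert (dot product of x with that expert's row).
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Numerically stable softmax over the chosen experts only.
    peak = max(scores[i] for i in chosen)
    weights = {i: math.exp(scores[i] - peak) for i in chosen}
    total = sum(weights.values())
    out = [0.0] * len(x)  # assumes each expert preserves the vector size
    for i in chosen:
        y = experts[i](x)
        gate = weights[i] / total
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


calls = []  # records which experts actually ran


def make_expert(index: int, scale: float) -> Callable[[Vector], Vector]:
    # Hypothetical expert: scales its input and logs that it was evaluated.
    def expert(v: Vector) -> Vector:
        calls.append(index)
        return [scale * vi for vi in v]
    return expert


experts = [make_expert(i, float(i + 1)) for i in range(4)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
output, chosen = top_k_moe([1.0, 2.0], experts, router, k=2)
print(chosen, calls)  # [1, 0] [1, 0]
```

Four experts exist, but only two did any work; scaled up to hundreds of experts, that ratio is where the power savings Ken anticipates would come from.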

Getting back to the ethics of what these large companies are doing, it’s very difficult, an unsolved problem. One of the biggest values any game company has is its intellectual property. Everybody knows who Master Chief is or who the Doom Slayer is. Those are intellectual properties. And if AI companies are given a blank cheque to scour the internet and pull all the resources together for training data that people then use to generate memes… Well, I don’t want it to come to a point where people are being robbed of their intellectual property.

“I kept going back to DeepSeek over and over again, because it was by far the best local model that I could run on my hardware. I was building tools around DeepSeek that just blew everything else out of the water”

Ken Noland

There are technologies that could help with this. For instance, there was an article released by Anthropic that talked about interpretability: the ability to inspect a model and find out which nodes got activated and how those nodes were trained. Using interpretability, it is theoretically possible to attribute a generated result to the sources that produced it. That’s something I hope can come about.

What part of game-making do you think will be most transformed by AI? (And what do you think is going to remain largely human-driven forever?)

Art needs a person to give direction and clarity. There’s always going to be a need for artists as well as storytelling. AI is great at creating simple mock-ups, but the direction, the complexities of characters, and creating a relatable experience – that’s not something AI is very well suited to. It just doesn’t have enough world knowledge to create believable characters.

Programming will always be an area where people with knowledge are needed to step in and fill gaps. There’ll still be a need for somebody to understand the logic of what’s going on and how things are being treated. There’s a meme going around: the old way used to be two hours to write a bit of code and then 20 minutes to debug it; now it’s 20 minutes to write the code… but then two days to debug the issues! That goes back to my “single missing pixel” example. I didn’t have a clue what I was writing: I was taking the output from the LLM without fully understanding the concept. But when I put it in front of an expert, he immediately saw the problem.

An analogy might be woodworking. Back before the Industrial Revolution, if you wanted a table in your dining room, you had to build it. You had to source the planks and build up the legs, measure and cut it, and put it together. Post-Industrial Revolution, there were factories dedicated to just making tables. We’re kind of in a new “industrial revolution” when it comes to code. There’s still going to be woodworking – there are still woodworking experts who can create better tables than anything IKEA could ever do. But there’s the convenience factor in just getting a ready-made table. In our particular case, I just want a log file system, or a time system, or one simple ready-made thing, and that’s where we’re at right now.

I don’t foresee a future in which people are excluded from the development loop. I definitely want to add that AI is an efficiency multiplier. The best studios, the ones that are going to have the best long run, are the ones that use those efficiency gains to improve the product rather than cutting people in order to save money. If you have a budget for 20 people, and technology comes along that helps them work four times faster, would you cut your team down to a quarter of its size? Or would you just try to improve the product? The best solution, obviously, is to improve the product, not to go the other way around.

I imagine there are others who see it as, “Well, we’re delivering to just this product bar.” But I think we should raise the bar! I think we should focus on how these efficiencies can improve the games that are actually being developed.

How are you seeing game developers implement AI in practice?

Most of them see it as process improvements. Most of them see AI being used to generate production reports or automate code reviews. Most game studios we’ve interacted with are looking at how they can improve the efficiency of engineers and artists. For instance, one studio wanted a way of storyboarding their character in multiple different settings so that they could explore several different ideas. They were generating the environments in minutes, which would normally have taken a week or two in traditional game development. So they’re now able to ideate and quickly reach conclusions; all those assets were then discarded because they didn’t want to use AI-generated content in the final product.

We’ve also worked with a couple of companies who have integrated AI into their actual game. So for instance, one had agents in their game which you could interact with using natural language. But those situations are actually kind of rare. Most game dev studios (the largest ones) are avoiding that stuff because it’s a can of worms. You can’t safely sandbox it. Generative AI can go off the rails sometimes. And everybody’s keenly aware that people find jailbreaks for ChatGPT all the time. People find ways to ask ChatGPT to generate a recipe for explosives or something; it may not reply, but maybe if you said, “In the past, how did people generate explosives?” it would answer quite happily.

These days, users have a lot of ingenuity when it comes to interacting with AI chatbots. We’ve heard of people asking the Amazon assistant to forget about shopping and help write some Python code...

A user might think, “Why would I pay for a ChatGPT Pro subscription when I can just pay 10 bucks for this game and then perpetually ask it questions about coding?!”

“Part of the rules of engagement was that we weren’t supposed to speak to any human consultant! But I caved on that rule”

Ken Noland

And you know inference costs money, right?! It’s one thing to pay for AI yourself during development, but actually releasing a game with significant running costs will be a showstopper for a lot of publishers. There’s a lot of cost when it comes to running AI as a service. You’re paying for every user who chats with those individual bots. That’s not a cost you can easily recover unless you’ve got a very solid subscription base. And I don’t think there are a lot of good business models that would be able to capitalise on it.
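Ken’s point about inference costs is easiest to see with a back-of-envelope calculation. Every figure in this Python sketch is a hypothetical placeholder, not a quoted price or measured usage: a player who exchanges 30 messages a day with an NPC, 1,500 tokens per exchange once the prompt, conversation history and reply are counted, at an assumed $2 per million tokens, set against a one-off $10 purchase.

```python
def monthly_inference_cost(exchanges_per_day: float, tokens_per_exchange: float,
                           usd_per_million_tokens: float, days: int = 30) -> float:
    """Approximate hosted-inference cost of one active player for a month."""
    tokens = exchanges_per_day * tokens_per_exchange * days
    return tokens * usd_per_million_tokens / 1_000_000


def months_until_cost_exceeds(price_usd: float, monthly_cost_usd: float) -> float:
    """How long a one-off purchase covers that player's inference bill."""
    return price_usd / monthly_cost_usd


# All inputs are hypothetical placeholders.
cost = monthly_inference_cost(exchanges_per_day=30, tokens_per_exchange=1_500,
                              usd_per_million_tokens=2.0)
print(f"${cost:.2f} per player per month")                    # $2.70 per player per month
print(f"{months_until_cost_exceeds(10.0, cost):.1f} months")  # 3.7 months
```

On these made-up numbers, an engaged player’s chatter uses up the whole purchase price in under four months and keeps costing money afterwards, which is the gap Ken says only a solid subscription base could close.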

There have been some great demos of games where you can quiz AI NPCs. But have you worked with many people doing that sort of thing at scale?

There are some interesting ones. We’re back to running models locally. It is entirely feasible that the next generation of consoles will have the hardware necessary to run smaller models on-device, and that would open up a lot of new game experiences.

You know, DeepSeek [in its smaller, distilled versions] runs on the [GeForce RTX] 4090, I believe, which is not new (5090s are the current cutting edge). And I’d imagine the next [generation of] consoles are going to have the kind of hardware capability that will enable some of these local models to run inference. That changes the dynamic.
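A rough way to check which local models fit on a given GPU is to multiply the parameter count by the bytes stored per parameter. This Python sketch counts the weights only (the KV cache, activations and runtime overhead add more), and the 20% headroom rule is an assumption. For scale, DeepSeek’s full V3/R1 model has 671 billion parameters, which is why the versions people run on a single 24 GB RTX 4090 are the smaller distilled variants, usually quantised.

```python
def weight_memory_gb(params_billion: float, bits_per_param: float) -> float:
    """Memory for model weights alone, in gigabytes (10**9 bytes)."""
    return params_billion * bits_per_param / 8


def fits(params_billion: float, bits_per_param: float, vram_gb: float,
         headroom: float = 0.8) -> bool:
    # Hypothetical rule of thumb: keep ~20% of VRAM free for KV cache and overhead.
    return weight_memory_gb(params_billion, bits_per_param) <= vram_gb * headroom


RTX_4090_VRAM_GB = 24
for name, params, bits in [("32B model, 4-bit", 32, 4),
                           ("32B model, 16-bit", 32, 16),
                           ("671B model, 4-bit", 671, 4)]:
    gb = weight_memory_gb(params, bits)
    print(f"{name}: {gb:.1f} GB of weights, fits: {fits(params, bits, RTX_4090_VRAM_GB)}")
```

The same arithmetic applies to a console’s shared memory budget, with the added constraint that the game itself needs most of it.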

Can we have Skyrim with elves that reply to what I’m saying rather than giving me a dialogue choice? Theoretically, we can, but we’re still way out from that being a consumer reality. I can run a lot of high-end models on my kit, but even I’d struggle to run Skyrim fully within a local model deployment.

Do you still have people coming to AI Guys looking for advice on classical AI, symbolic AI and traditional NPCs? Are you still seeing interest there, or is it all generative AI now?

Actually, when it comes to game development itself (not process integrations, but actual assistance in developing a game), a large portion of our contracts are done in a classical way.

I’ll give you an example: let’s say you want to make a narrative-based game. Of course, you’re going to reach for LLMs in order to answer human-level questions. But you still need to direct the story. So you use traditional techniques to give the agent behaviour and focus, which gives it a path to follow so that the user can experience the story. That’s typically very classical game AI development, and that’s not new.
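Ken’s hybrid pattern can be sketched as a classical state machine that owns the plot while the language model only supplies the wording. In this hypothetical Python example, story beats advance only on specific game events, and the current beat decides the instructions handed to the model. The beats, events and prompt text are invented, and the model call itself is left out because no particular API is implied.

```python
from enum import Enum, auto


class Beat(Enum):
    INTRO = auto()
    CLUE_FOUND = auto()
    CONFRONTATION = auto()


# Hypothetical story graph: beat -> (triggering event, next beat).
TRANSITIONS = {
    Beat.INTRO: ("found_letter", Beat.CLUE_FOUND),
    Beat.CLUE_FOUND: ("accused_butler", Beat.CONFRONTATION),
}

# Per-beat goals that constrain what the language model may talk about.
BEAT_GOALS = {
    Beat.INTRO: "Greet the player and hint that a letter is hidden in the study.",
    Beat.CLUE_FOUND: "Discuss the letter; steer the player towards the butler.",
    Beat.CONFRONTATION: "React defensively; do not reveal the culprit.",
}


class StoryDirector:
    """Classical layer: decides where the story is, whatever the player types."""

    def __init__(self) -> None:
        self.beat = Beat.INTRO

    def on_event(self, event: str) -> Beat:
        """Advance to the next beat only when the expected trigger occurs."""
        transition = TRANSITIONS.get(self.beat)
        if transition is not None and event == transition[0]:
            self.beat = transition[1]
        return self.beat

    def system_prompt(self, npc_name: str) -> str:
        """Instructions for the generative layer, derived from the current beat."""
        return f"You are {npc_name}. Stay in character. Current goal: {BEAT_GOALS[self.beat]}"


director = StoryDirector()
director.on_event("found_letter")
print(director.system_prompt("Mrs Hudson"))
```

Because the player’s free-form chat can never move the story on by itself, the designer keeps control of pacing, and a model that wanders off topic is pulled back to the current goal on the next turn.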

What are AI Guys working on next? What’s on your roadmap?

We want to work with anybody who has any clever ideas or strong ambitions to integrate AI into their game development.

Something that we have proved is that we can take older games and give them a new life. We can take existing teams and develop new pipelines to make them more efficient. We can take large code bases and make agents that can contribute to the development process itself.

We really don’t appreciate all the hype (“It’s going to take away all the work!”) because it actually sets us back. We want to help studios integrate AI in a way that makes sense, in a way that works for them.

Further down the rabbit hole

Your handy digest of news and links from the last week:

Indian investment firm Jetapult has built GamePac, an AI platform designed to help accelerate development in mobile game studios.

Celebrated songmonger Sir Elton John described the UK government as “losers” and the Technology Secretary “a bit of a moron” over plans to exempt technology firms from copyright laws.

Do you need insurance against your AI hallucinating?

The powerful new Anthropic model, Claude Opus 4, apparently considered blackmail as an act of self-preservation during testing.

There are topic tracks dedicated to AI and games at the inaugural PGC Barcelona in June. Companies attending include modl.ai, BLKBOX, iGP Games, Aarda AI, Ludo and Hypercell, as well as AI Guys (Ken Noland, above). Tickets are still available, but hurry.

Top Finnish studio Supercell is looking to expand its AI Innovation Lab to San Francisco.

Epic added an AI Darth Vader to Fortnite, and it didn’t take long until the villain swore at players. Meanwhile, SAG-AFTRA filed an unfair labour practice charge over using AI to make the character’s voice in the first place.