"You need to differentiate classical AI and generative AI in games"

Anna Moss of ii Connection discusses recruitment, ethics, and crucial distinctions between types of AI roles.

Welcome to the latest AI Gamechangers, the weekly(ish) newsletter bringing you conversations about the practical role of AI in the games industry. Check out the archive to read them all for free.

Today, it’s the turn of recruitment. We’re speaking with Anna Moss, a specialised recruiter at ii Connection (part of Carbon & Finch), where she primarily handles recruitment for Microsoft D365 roles, games development positions, and AI-related talent.

Scroll to the end for the latest AI games news, including the Intelligent Streaming Assistant promised by Inworld AI, Logitech’s Streamlabs and NVIDIA; Razer’s Project AVA gaming copilot; the World Economic Forum’s employment report; new startup Studio Atelico; Goat Gaming’s Telegram AI agents; and loads more.

Anna Moss, ii Connection

Meet Anna Moss, an IT talent specialist at ii Connection and a recruiter specialising in the games, AI, and Microsoft D365 sectors. She is an ambassador for Women in Games, an ambassador for the World Ethics Organization, and a member of Women in AI.

She works across multiple sectors, including generative AI startups and game studios, while testing JeffreyAI, Carbon & Finch’s in-house tool system. Her philosophy is grounded in practical realism. Although she's very used to working with AI, she is sceptical of hype. She advocates for clear distinctions between different types of AI and emphasises the importance of human oversight in AI-assisted recruitment processes.

Top takeaways from this conversation:

AI roles vary significantly by industry. What companies mean when they say they're "hiring for AI" can vary dramatically. In games, it could mean either classical AI (gameplay/NPC programming) or generative AI, while in other industries it often simply means Python developers with data science experience.

While there is demand for AI talent, it's balanced by ongoing layoffs in the sector. Many projects may fail or face practical difficulties.

Companies aren't discussing AI ethics out of altruism but necessity, largely due to unclear regulations and potential risks. This is particularly evident in creative sectors like games, where concerns about copyright and job displacement are prominent.

Some candidates actively avoid AI-focused roles for ethical reasons.

AI Gamechangers: First of all, please tell us what you do.

Anna Moss: I work for ii Connection, which belongs to Carbon & Finch. Carbon & Finch is a Microsoft D365 consultancy – finance and operations specific to the [Microsoft Dynamics] 365 field.

They also have JeffreyAI, a separate company that they own. I use it as a custom ATS [Applicant Tracking System]. In reality, JeffreyAI began as a sales and marketing automation platform, but they’ve created a space specifically for me on the whole CRM platform for ATS. So I’m just basically a test rabbit for them.

I recruit people in various sectors for Carbon & Finch. Internally, I do D365 roles. For JeffreyAI, I do development roles, product management and everything else needed for a platform like this. Externally, I work with generative AI startups and games clients. Gen AI is not real AI, in my opinion, but a fun AI. I work with game studios, for example, for AI roles, as well as general programming roles on Unreal or custom engines, graphics roles, or anything to do with programming. I cover all of them.

When companies say today that they’re looking for AI talent, what does that actually mean?

It really depends on the company, the setup and exactly who they need. I had a client in the oil and gas industry, and they were building an AI team to build solutions internally for their engineering and day-to-day administrative tasks to make everything else easier and better. They’ve got a Head of AI and Machine Learning in Aberdeen. What he was looking for was not necessarily experience in the oil and gas industry but experience with AI products for various industries and experience in working in teams like this. Plain and simple, he was actually looking for Python developers who understand data science! And they built a prototype.

“There will be jobs popping up, but it’s not going to be with established teams. It’s going to be with something new. I would be on the lookout for jobs in the indie space, in the mobile space, and in smaller teams”

Anna Moss

Most of the solutions that I see would require Python as the main skill, and experience with large language models. Because I work predominantly as a Microsoft Partner and also closely with Tools For Humanity, it means they probably want experience with ChatGPT. Sometimes it’s Copilot. You can’t really call Copilot a large language model; it was there before ChatGPT, and it’s basically automation. It’s a solution that is plugged into your laptop and lets you do a lot of different things rather than just using a large language model. But it has a chat function, and that’s what people often get confused about. A lot of people think that Copilot is a large language model like ChatGPT.

If we’re talking about games, the roles that I would cover when we touch upon AI specifically would either be classical AI, which is gameplay, or it would be generative AI within games, which means those people will be building tools that animators or graphic programmers or anybody else can utilise. It’s basically an AI solution for a game studio.

A good example could be BARB. They’ve got very good talent in researching and building internal AI solutions and testing a lot.

It depends. If we are talking about classical AI, then it’s going to be a programmer in games, with either custom engine experience or Unreal experience. If we’re talking about generative AI, that’s going to depend on what solution they’re trying to build – is it just going to be a plug-in for ChatGPT? If yes, then it’s probably going to be a Python developer.

AI seems to be getting a lot of investment at the moment, and many companies are calling themselves AI companies. Is there a demand for more talent in this space?

There is a demand, but I wouldn’t say it’s unmanageable or very high.

[Things are] shifting back and forth because of the layoffs. What I would call “demand” is when you actually don’t have enough talent, and you need to train more. From a recruitment perspective, there is a lot of movement, a lot of applications and a lot of candidates. I would put it this way: demand is equal to the layoffs.

There are a lot of companies that are able to hire themselves, internally. Even if it’s a startup, it works much better than, say, four years ago. Every hiring manager can now get a recruiter seat on LinkedIn and find people pretty quickly.

I wouldn’t say it’s the case that you need to switch from games to AI, for example. If you do that, you should be aware of the fact that while we do see a lot of investment in AI, and a lot of companies are trying to integrate generative AI in their solutions, there are going to be a lot of flops as well. It’s inevitable; that’s just how the world works. When you’re trying to adopt something new, you think you need it, and then after a year of investment, you discover it’s pretty difficult. “We’ve got a CTO, and now we’ve got a team!” Well, actually, it’s not manageable.

“That would be my main message to everyone. You need to differentiate classical AI and generative AI in games. Classical AI is gameplay. You need to understand how game design works”

Anna Moss

And we’ve seen that happening already in industries that try to adopt AI faster. It’s very complicated. Second, if you try to adopt generative AI, you have giants in the industry already! If you try to plug any solutions into those giants, you will be depending on the prices they propose, on the updates that they run. Right now, it’s working with ChatGPT 3.5. Tomorrow, they will say, “You all have to switch to 4.0.” You should be very careful when you’re switching to the “generative AI” solutions industry because there are a lot of projects that will be unsuccessful, projects that will be difficult or slow-burning or over-invested and then overblown. And obviously, layoffs there are inevitable eventually, too.

So, kinda, yeah, there is demand! There are a lot of people working in AI, and a lot of hiring is happening… but then a lot of firing is also happening already.

A topic that comes up a lot on AI Gamechangers is ethics. I know that you promote ethical recruitment practices. AI is such a transformative technology, people are worried about losing their jobs to it; they’re worried about the training data; they’re worried about copyright. Do you find that companies increasingly want to talk about AI ethics?

They don’t really want to talk about ethics – they have to. The reason they have to talk about ethics is because there are no regulations. The first regulations kick in this year. The EU [AI] Act that we had, and everything else, are very, very vague about what you can and cannot do. We’ve got high-risk things that you can build or lower-risk things. What all of this means, nobody has any idea, until you actually do it and get into trouble.

That’s why people inevitably have to talk about some framework that they can work within. That’s why ethics comes into play, especially when we talk about copyrights and infringement. We’ve got a lot of solutions that are being built, especially when it comes to art (video, AI related to video, or anything with audio AI), so they have to talk about it. They talk about it, and they try to work within those frameworks that they build themselves.

If you talk with ISO, for example, they do have something that they are trying to put out there. When it’s related to AI regulations, it’s all very new. I don’t really believe it is actually working so far because, obviously, there are a lot of tools that have been built and been used already that I would call infringement, and another person wouldn’t call infringement. That’s where ethics basically kicks in, and that’s why they talk about it, not out of the kindness of their own hearts.

Let’s talk about your own use of AI at ii Connection. Do you use AI-powered recruitment tools internally? What tools do you find yourself using day to day that are AI-powered?

Everything that we’re using is plugged back into JeffreyAI because, as I said, I’m like a rabbit on which they are testing everything! Because it’s my ATS, and they can build whatever I ask them to and plug it in.

“They have tools that they use on a daily basis that are already powered by AI. The software they’re using is already two steps ahead. But a lot of people don’t want to work with it because of ethical reasons. People, especially in creative sectors, have an awareness”

Anna Moss

Most of the things that I asked them to plug in would be related to time-saving. For example, there are tools on the market that listen to your call and transcribe it live, and then you can save that transcript or you can ask questions about that call. I have asked my team to build something similar, and that’s what they’ve built within JeffreyAI. It deletes the recording straight away, which is, according to our dev team, a GDPR-compliant and ISO-compliant approach. If you’re keeping the transcript, then it’s the same as if you were taking notes. Nothing is being recorded, but it’s being transcribed.

We’ve got a scoring system as well. I cannot use it to reject candidates, for example, because it’s high risk according to the EU AI Act. But I can use it as a scoring system to prioritise candidates rather than to reject them. I’ve got benchmarks for the role (benchmarks are not the same as a job description). The job ad is something that the candidate sees; a job description is very full, and it’s been discussed with the hiring manager. But benchmarks are literally just sentences of exactly what the hiring manager needs and is looking for as a standard. It would have maybe 10 or 15 benchmarks per role. It would compare my call with a candidate’s CV and anything else that I put in the notes about the candidate, and then it would score them from one to 100 and prioritise them. This way, we prioritise the interview: who’s going to be at the first stage of the interview first and so on.
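To make the "prioritise, don't reject" idea concrete, here is a minimal Python sketch. It is not how JeffreyAI works (the interview doesn't describe the implementation). The `Candidate` class, the `benchmarks_met` field and the scoring formula are all hypothetical, and the sketch assumes someone (or something) upstream has already judged each benchmark as met or not met from the call, CV and notes.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    # Hypothetical input: one True/False per benchmark, judged upstream
    # from the call, the CV, and the recruiter's notes.
    benchmarks_met: list[bool]


def score(candidate: Candidate) -> int:
    """Map the share of benchmarks met onto a 1-100 scale."""
    total = len(candidate.benchmarks_met)
    if total == 0:
        return 1
    met = sum(candidate.benchmarks_met)
    return max(1, round(100 * met / total))


def prioritise(candidates: list[Candidate]) -> list[tuple[str, int]]:
    """Order candidates for interview. Nobody is removed from the list."""
    ranked = sorted(candidates, key=score, reverse=True)
    return [(c.name, score(c)) for c in ranked]


if __name__ == "__main__":
    pool = [
        Candidate("A", [True] * 6 + [False] * 4),
        Candidate("B", [True] * 9 + [False] * 1),
        Candidate("C", [False] * 10),
    ]
    for name, points in prioritise(pool):
        print(f"{name}: {points}")
```

The point of the design is in `prioritise`: the output has the same length as the input, so the score only changes the order in which a human recruiter looks at people.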

We can use it for job applicants as well, but we found it very untrustworthy and not useful, per se, because it only compares the CV to the job description. This just doesn’t work correctly. The best candidates I’ve had in games, for example, don’t really fill in their CVs. They just say, “I worked at Splash Damage for three years as an AI programmer, then I switched to Firesprite for three years. I can do Unreal.” And this is all you can see on the CV. Obviously, that’s going to be a lower score than somebody who’s been working only for a few years but has put a lot of keywords in the CV, and then that automation tool will prioritise that candidate first. It’s just not fair. It doesn’t really work efficiently. You still need a recruiter to have a look at it and check every time.

We also found [problems] in other disciplines, outside of games (in the D365 sector, for instance). Do you know about company CVs? People can have their personal CV and company CV. A company CV lists all the projects and information on everything you’ve done. It’s probably five or six pages long and literally tells you everything about their work experience there. A lot of candidates take that company CV and use it for job applications because it’s quite handy. It’s just very long. Those CVs get the highest scores because they are so long. We could use that for CV matching and scoring, but we found it ineffective and not fair.
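The bias towards long, keyword-heavy CVs is easy to reproduce. The sketch below is a deliberately naive keyword counter in Python, an assumption made purely for illustration; the interview does not say which matching method the tool uses. The keyword list and the padded CV text are invented, while the terse CV is the example from the interview.

```python
import re


def keyword_score(cv_text: str, keywords: list[str]) -> int:
    """Count every whole-word keyword occurrence in the CV text."""
    text = cv_text.lower()
    return sum(
        len(re.findall(r"\b" + re.escape(word.lower()) + r"\b", text))
        for word in keywords
    )


# Hypothetical keywords taken from a job description.
KEYWORDS = ["unreal", "ai", "gameplay", "npc"]

# The terse CV of an experienced candidate, as quoted in the interview.
terse_cv = (
    "I worked at Splash Damage for three years as an AI programmer, "
    "then I switched to Firesprite for three years. I can do Unreal."
)

# An invented CV from a less experienced candidate who repeats keywords.
padded_cv = (
    "Unreal gameplay programmer. AI tools in Unreal. NPC AI, gameplay AI, "
    "Unreal AI plug-ins. Two years of Unreal gameplay and NPC work."
)

if __name__ == "__main__":
    print("terse:", keyword_score(terse_cv, KEYWORDS))
    print("padded:", keyword_score(padded_cv, KEYWORDS))
```

Because the score is a raw count, every extra page of a company CV can only push it up, which is why a recruiter still has to check the ranking by hand.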

“They have to talk about ethics because there are no regulations. The first regulations kick in this year. The EU [AI] Act is very vague about what you can and cannot do. That’s why ethics comes into play, especially when we talk about copyrights and infringement”

Anna Moss

Another automation tool we’re using is the sales and marketing system on JeffreyAI. It’s not really AI if you ask me; this is just an algorithm, simple mathematics on the back end. People can call it AI, but I call it automation. It can give you suggestions on emails to send, messages to send, and things like that. But so does Copilot. If you’re using [Microsoft software] on your laptop, are you actually using AI on a daily basis? You can say you do, but I say you don’t.

The most important [thing], I would say, is our transcription and the matching system. But again, it’s all supposed to be human checked. You can’t rely on it fully.

Creatives working in games are threatened by AI. Perhaps it’s going to replace them? We’re back to the ethics. Are you finding that there is a pushback? Are there people who, during the recruitment process, say they don’t want to work with AI at all?

Yes, definitely, especially if we talk about design, art roles, animation, VFX, and so on.

They do have tools that they use on a daily basis that are already powered by AI. The software they’re using is already two steps ahead, and they are already plugged into generative AI there – they are already using generative AI.

But a lot of people don’t want to work with it or don’t want to work with AI startups, for example, because of ethical reasons. Of course, they do. That’s one of the hiring struggles that you have if you’re hiring for AI-powered game startups. People, especially in creative sectors, have an awareness. Fundamentally, they are absolutely correct because we don’t have strict regulations and policies in place just yet. I completely agree with them regarding their ethical framework.

I had a client in the Middle East. They had a military AI solution that was very, very difficult to recruit for, especially on a remote basis, because there are a lot of politics involved. But this is like a “maximum” of an ethical question! In games, at least, it’s not so maximised. People don’t turn around and start sending me political messages about games and how they are utilising their art and everything else. They could have! They don’t, but it’s much harder to hire for AI solutions in the military rather than in art in games. But still, people have their own ideas of what they want to work with and what they don’t want to work with.

Games is a huge business, worth some $188 billion. Is games a significant part of your portfolio now? Is it where you mostly work?

Not really! In the past, my background was in recruitment and games, and in 2023, the demand for game roles externally for agencies went down rapidly. Even though we had a lot of layoffs and a lot of hiring in 2023, recruitment teams internally in game studios were still managing it themselves. They started the first push that we felt. External services, like recruitment, were the first ones who felt that something wasn’t quite right. Once it started going down in January 2024, that’s when I started expanding into other industries.

The most demand I have right now is D365, because I do internal roles in Carbon & Finch. Next would be AI, and games would be [third].

Also, a lot of contracts that I pick up in games end up filling roles internally, or something doesn’t go quite right with the project’s funding. That’s my experience in games lately. There’s much more movement than in 2023, but it’s not very successful so far. We still don’t have enough work for it to be worth paying an external agency. But there are still jobs, and the agency’s still working, and we’re still profitable, and it’s still a good market. There’s just much less demand, I would say, for external recruitment.

Generative AI has taken over the conversation. But that’s not the same as machine learning, traditional AI in games, and so on. Is there anything that you wish people entering the games industry now knew about AI? Are there any misconceptions that you think we should talk about?

That’s a very big problem in games. People need to start differentiating [types of] AI. People coming into the games industry don’t really get that just yet. A lot of people apply to my jobs that have nothing to do with generative AI or Python or anything else. They still apply for these jobs, thinking, “Oh, it’s a role in the games industry; I worked in the automotive industry, so I can work in games and AI.” And I’m like, “No, this is classical gameplay AI. It’s got nothing to do with what you’re doing.”

I would say that would be my main message to everyone. You need to differentiate classical AI and generative AI in games, because now there’s more generative AI in games appearing and popping up, and it becomes much more difficult to understand what is going on.

Classical AI is gameplay – everything to do with NPCs, gameplay, and the behaviour trees. You work closely with an engine. You need to understand how game design works because you will be very closely related to game designers.
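For readers who have not met a behaviour tree, here is a minimal Python sketch of the idea. It is an illustration only: production trees in engines such as Unreal are authored in engine tooling and also track a "running" status, which this sketch leaves out. The guard NPC, its state dictionary and all function names are hypothetical.

```python
from typing import Callable

# A node takes the NPC's state and reports success (True) or failure (False).
Node = Callable[[dict], bool]


def sequence(*children: Node) -> Node:
    """Succeeds only if every child succeeds, checked in order."""
    def run(state: dict) -> bool:
        return all(child(state) for child in children)
    return run


def selector(*children: Node) -> Node:
    """Tries children in order and stops at the first one that succeeds."""
    def run(state: dict) -> bool:
        return any(child(state) for child in children)
    return run


def player_visible(state: dict) -> bool:
    return state["player_visible"]


def attack(state: dict) -> bool:
    state["action"] = "attack"
    return True


def patrol(state: dict) -> bool:
    state["action"] = "patrol"
    return True


# Guard logic: attack if the player is visible, otherwise fall back to patrol.
guard_brain = selector(sequence(player_visible, attack), patrol)

if __name__ == "__main__":
    for visible in (True, False):
        state = {"player_visible": visible}
        guard_brain(state)
        print(visible, "->", state["action"])
```

The tree itself is the design: a game designer can reorder or swap branches to change how the NPC behaves, which is why this kind of AI programmer works so closely with design.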

“If you try to adopt generative AI, you have giants in the industry already! If you try to plug any solutions into those giants, you will be depending on the prices they propose, on the updates that they run”

Anna Moss

Generative AI is supposed to be somewhere peripheral, like how we perceive roles in DevOps in games. A good example would be network programming. Network programming is a solution for a game, and very often, it branches out and becomes its own discipline. Look at multiplayer games. Beautiful networking at its core. One of my clients is The AI Guys. They’re actually differentiating it very well: classical AI for gameplay and then generative AI. That’s how I understand it.

What are your hopes and fears for the year ahead? Do you think that the games industry has got a positive year ahead of it, or are we still facing headwinds?

I speak to so many internal recruiters, and I don’t feel like anything good is coming yet. I’m sorry, I have to say that! The reason is that when I speak to internal recruiters, I can feel that they don’t have enough internal work just yet. We’re not even talking about me helping them with recruitment. They themselves fear for their own jobs because there’s not much movement going on, and a lot of things are being delayed or cancelled.

There will be jobs popping up, but it’s not going to be with established teams. It’s going to be with something new. I would be on the lookout for jobs in the indie space, in the mobile space, and in the smaller teams. This makes it much more difficult for candidates because that means they can’t go on normal searches or job boards; they have to be on the lookout on LinkedIn instead because hiring managers are hiring either through references or posts. It is going to get better, but it’s not going to be much better. It’s just going to be a little bit different.

Further down the rabbit hole

What other AI-and-games news has been happening this week?

CES 2025 was last week, bombarding us with tech announcements. For instance, Inworld AI, Streamlabs (Logitech), and NVIDIA announced a collaboration to create an “Intelligent Streaming Assistant” this year. The system combines Streamlabs' streaming tools, NVIDIA's ACE digital humans and computer vision, and Inworld's gen AI to create an interactive co-host and producer, which can deliver commentary on gameplay and handle the production aspects of broadcasting.

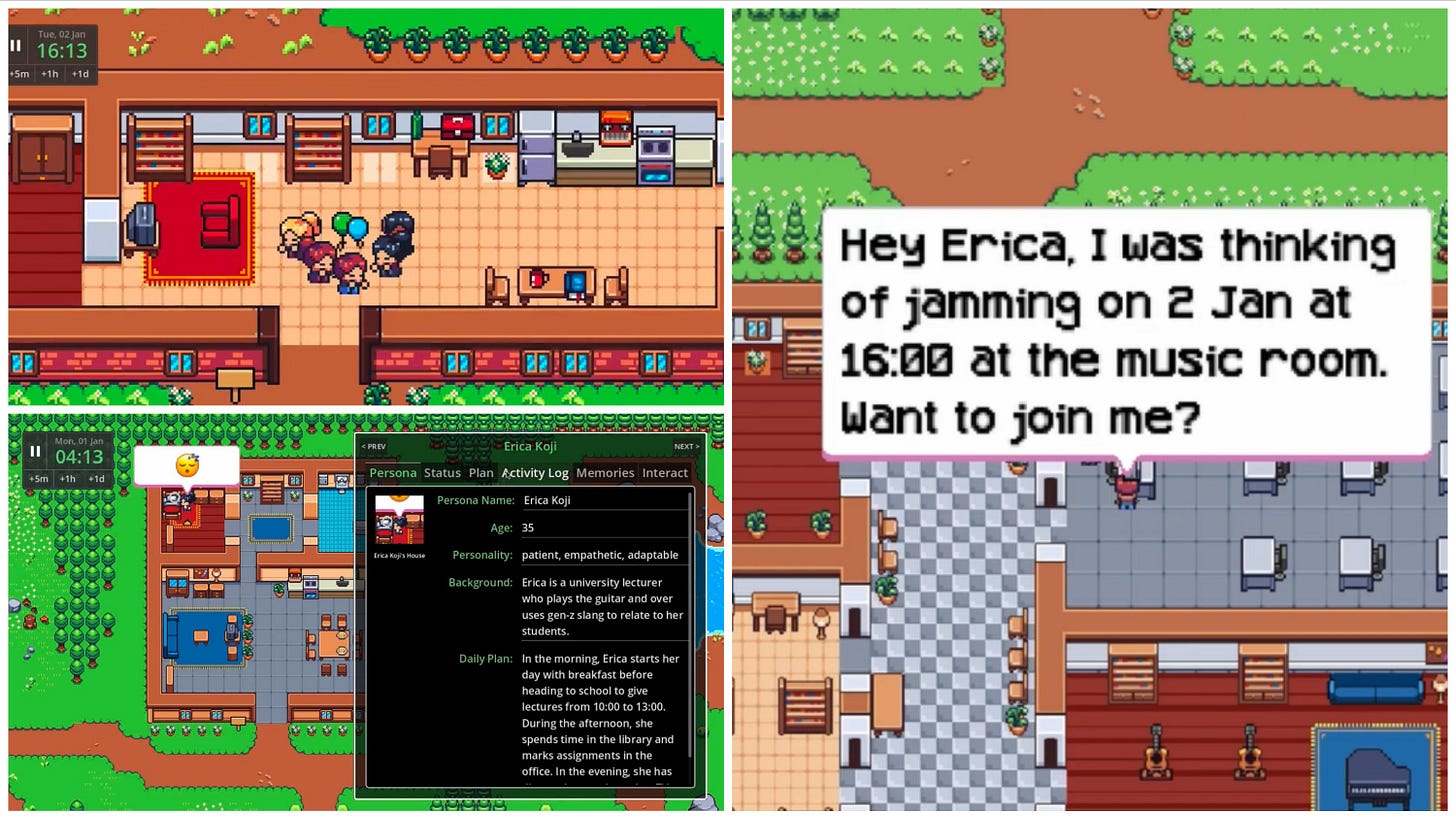

There was so much NVIDIA news from CES that we can’t cram it all into your inbox, but of interest was the company’s partnership with Krafton on Inzoi. Inzoi is a Sims-style life simulator, and NVIDIA’s AI tech will populate the game with smart characters who adapt and respond to the world according to their personalities.

Also at CES, hardware maker Razer announced Project AVA, an AI coach and copilot which will provide real-time expert advice on how to excel at the game you’re playing. This has split journalists, with it being described variously as the best announcement and least favourite announcement of the show.

According to a World Economic Forum report, while AI may eliminate 92 million jobs by 2030, it's projected to create 170 million new positions (a net gain of 78 million jobs globally). The emphasis is on roles requiring AI expertise, cybersecurity skills, and technological literacy.

Next week, PG Connects and the Big Screen Gaming Summit will come to London. There’s still (just) time to get tickets. Anna Moss, interviewed above, will be a speaker, as will Filuta AI’s Filip Dvorak. And you can read an interview with him about “why generative AI is not the holy grail of artificial intelligence and why composite AI may offer developers even greater opportunities” over at PocketGamer.biz now.

The new season of Mythic Quest begins streaming on Apple TV+ on 29th January. The comedy series follows a fictional video game studio, and it seems in this fourth season that the hot topics for satire will be UGC and AI.

Telegram gaming platform Goat Gaming, operated by Mighty Bear Games, has announced the launch of AlphaGOATs. These AI agents can play Telegram mini-games and create content to earn revenue for their owners. The agents will launch on 6th February as part of Goat Gaming's broader AI integration strategy, with the company's platform reportedly serving over 5 million active users since its 2024 launch.

Studio Atelico, a new startup founded by veterans from Uber, Meta, and Creative Assembly, has announced an on-device AI game engine. It aims to make generative AI accessible and affordable for developers by eliminating the need for expensive cloud solutions. Studio Atelico demonstrated its capabilities with a tech demo (called GARP) featuring autonomous AI agents running in real-time on consumer hardware. We’ll be interviewing these folks in a future edition of AI Gamechangers.