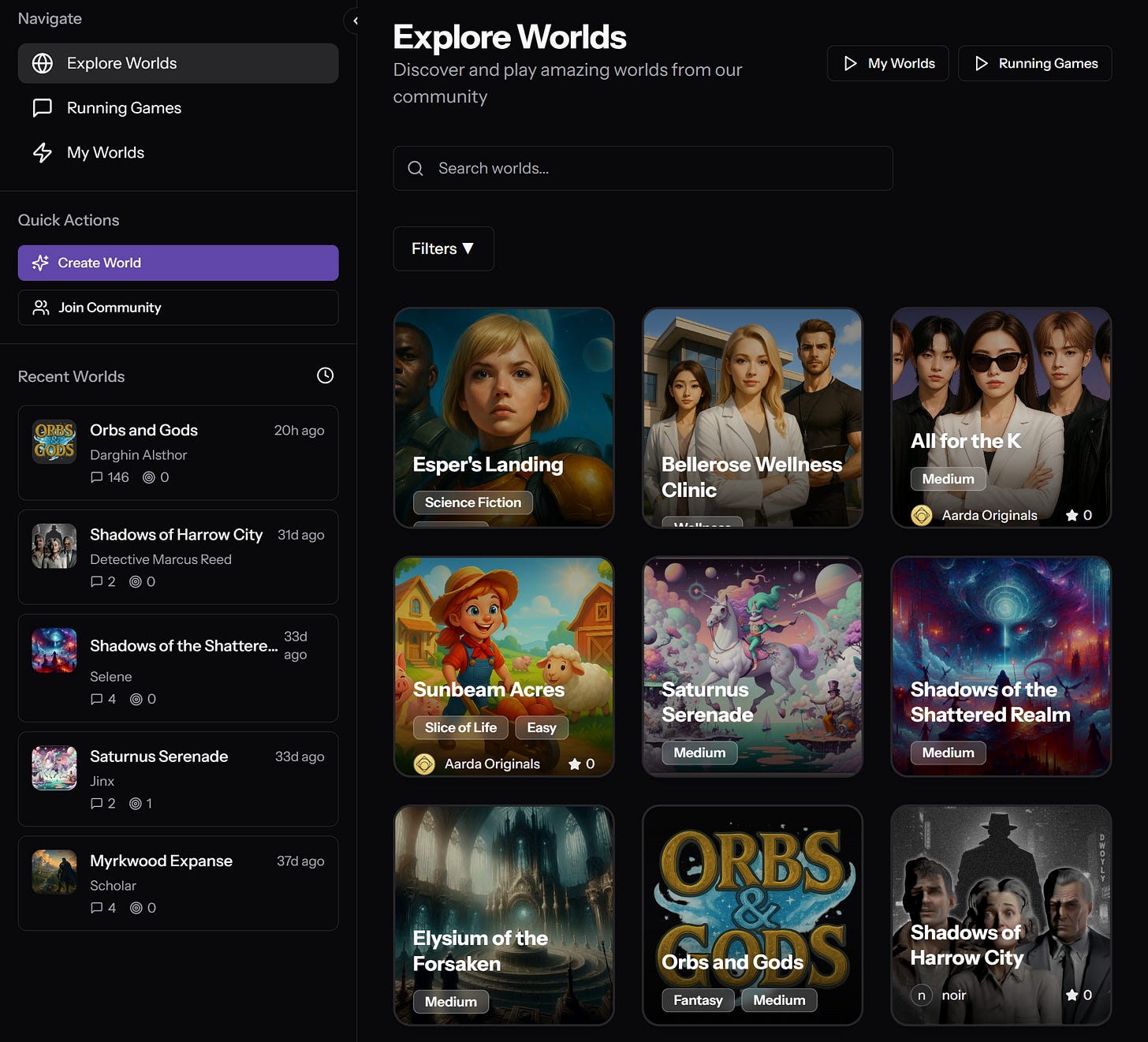

"We keep the human in the loop – it’s a platform for self-expression"

Robert Edgington on ethically training AI to jam with you in a game where players can become rockstars.

Hello! Welcome back to AI Gamechangers, the newsletter where we explore how generative artificial intelligence is reshaping the games industry from the inside out. Each week, we chat with founders, developers, and creatives who are putting AI to work in practical and surprising ways.

This time, we’re diving into a new kind of music game with Robert Edgington, the founder of Record Games. His mission? To build a social, mobile-first platform where anyone can experience the thrill of being a musician, powered by AI that can jam, critique, and collaborate like a virtual bandmate.

As always, scroll to the end for a burst of AI-related news from around the games industry, including the latest events, disputes and investments.

Robert Edgington, Record Games

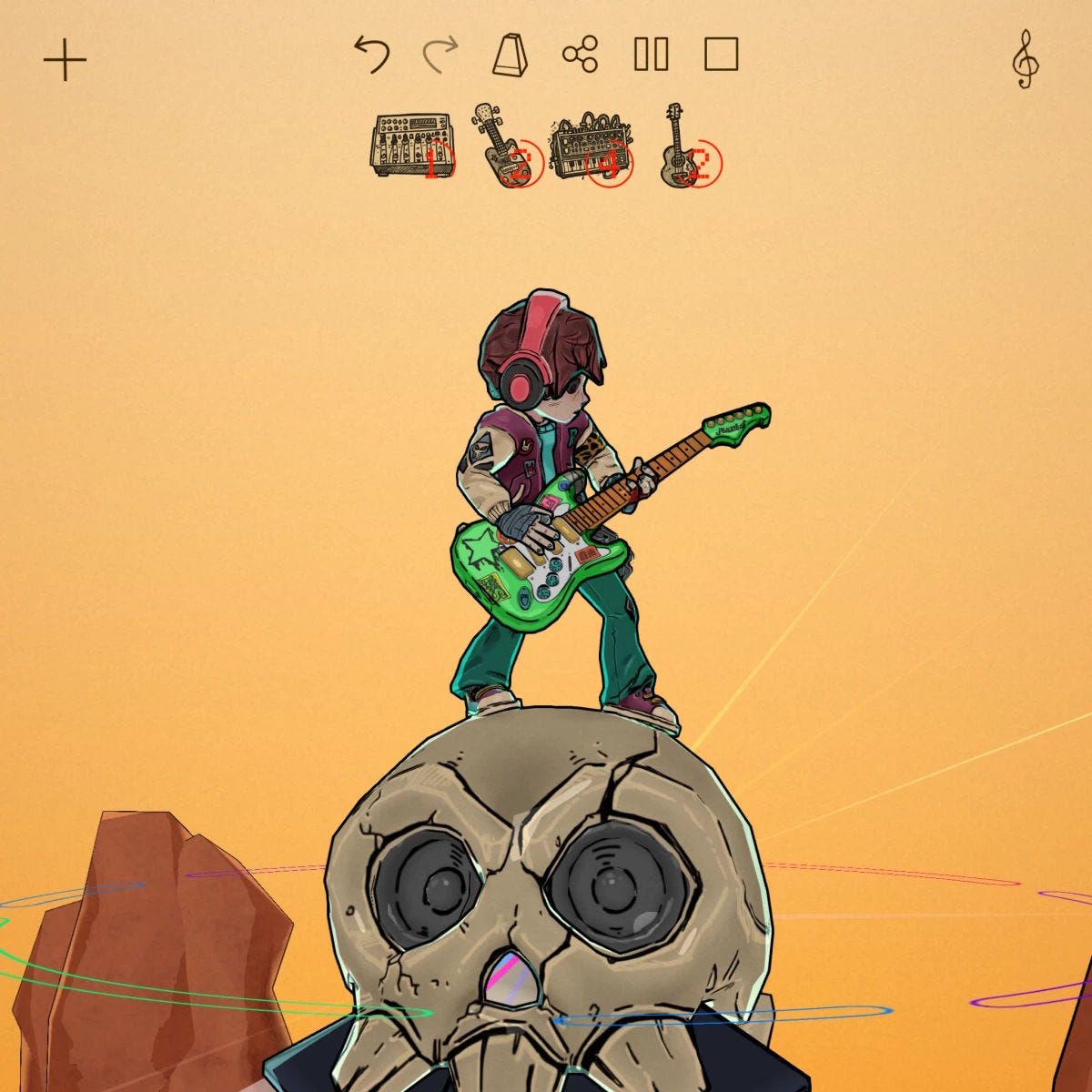

Today, we chat with Robert Edgington, founder of Record Games and creator of Starstruck Galaxy, a music game where players write, perform, and share original tracks, with a little help from AI bandmates.

With a background in neurotechnology and physics, Robert brings deep technical expertise to a playful new format: a game where AI session musicians can finish your song, and NPC judges rate your track based on gameplay data.

In this interview, he reveals how he’s building ethical music models, designing an ever-evolving musical universe, and turning players into creators.

Top takeaways from this conversation:

Starstruck Galaxy blends music-making and gameplay, encouraging users to write original songs and compete in “battle of the bands”-style events.

AI powers in-game bandmates and music critics, helping players overcome creative blocks and generating real-time feedback based on gameplay data.

The game uses symbolic (MIDI-based) models and retrained versions of AudioLDM to create interoperable, editable, and copyright-safe content.

Robert’s team is building ethical, game-native AI models, with human input at every stage, enabling player creativity without infringing on music rights.

AI Gamechangers: What's the elevator pitch for your business?

Robert Edgington: We're a studio based near Worthing [England], and we're making a social music game for mobile.

The thing that makes it different as a music game is that you make original music. I want people to be able to share and then validate that fantasy of being a real musician.

There was this cultural movement 10 years ago with games like Guitar Hero and Rock Band, and they did a great job emulating a fantasy of holding a guitar in your hands. But I think if you want to try and create a music experience in 2025, you need to do it for real.

So we're integrating original music-making with a social platform, and charts, and a “battle of the bands” format, to allow people to have that feeling of getting a number one in a game, and sort of do what Instagram did for photography, but for music.

The AI comes in as we bring that “musical metaverse” to life with AI NPCs that will jam with you. We're really using AI to drive that progression from a game perspective. It can get you over those creative hurdles and remove the friction of trying to finish a song (or maybe even start).

You may have made half a song, put down a drum beat and a bassline. Then you can ask an AI “Jimi Hendrix” to come in, and they'll put down a screaming guitar part for you. It will fit your song perfectly, in key, in tune, in rhythm.
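Robert doesn't describe how the AI bandmate guarantees a part that fits "in key, in tune, in rhythm". One simple, common way to enforce that on generated MIDI is a post-processing pass that snaps each note to the song's scale and quantizes its timing to a grid. The sketch below assumes notes are `(pitch, start_beat)` pairs, a major key, and a 16th-note grid; `snap_to_key`, `quantize` and `fit_to_song` are hypothetical names, not Record Games' code.

```python
# Hypothetical post-processing pass: snap generated notes to the song's key
# and quantize their start times to a rhythmic grid.

MAJOR_SCALE = (0, 2, 4, 5, 7, 9, 11)  # semitone offsets of a major scale


def snap_to_key(pitch: int, tonic: int) -> int:
    """Move a MIDI pitch to the nearest note of the major scale on `tonic` (0 = C)."""
    best, best_dist = pitch, 12
    for candidate in range(pitch - 6, pitch + 7):
        if (candidate - tonic) % 12 in MAJOR_SCALE:
            dist = abs(candidate - pitch)
            if dist < best_dist:  # on a tie, the lower candidate wins
                best, best_dist = candidate, dist
    return best


def quantize(start_beat: float, grid: float = 0.25) -> float:
    """Round a start time (in beats) to the nearest grid step; 0.25 beat = a 16th note in 4/4.

    Note: Python's round() sends exact halfway cases to the even step.
    """
    return round(start_beat / grid) * grid


def fit_to_song(notes, tonic: int, grid: float = 0.25):
    """Apply both constraints to a list of (pitch, start_beat) tuples."""
    return [(snap_to_key(p, tonic), quantize(s, grid)) for p, s in notes]


if __name__ == "__main__":
    raw = [(61, 0.1), (64, 0.49), (66, 1.02)]  # C#4, E4, F#4 with sloppy timing
    print(fit_to_song(raw, tonic=0))  # [(60, 0.0), (64, 0.5), (65, 1.0)]
```

A real bandmate model would more likely learn to stay in key on its own; a hard constraint like this is a cheap safety net rather than a replacement for it.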

We're also training our own AI to generate MIDI music so it's fully interoperable. It means players can edit it, change instruments, that kind of thing. And we're not just training AI to generate songs, but to critique them as well. This is our secret sauce for the game, and it’s what will allow us to judge “battle of the bands” competitions with AI directly, without needing human feedback or needing to curate charts.
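Generating MIDI rather than raw audio is what makes the output interoperable: a Standard MIDI File is a list of note events that any DAW can open, and changing instrument is just a different General MIDI program number. Below is a minimal, stdlib-only sketch of writing a single-track (format 0) file; the `write_midi` and `vlq` names and the example riff are illustrative assumptions, and with no tempo event the file plays at the SMF default of 120 BPM.

```python
import struct


def vlq(value: int) -> bytes:
    """Encode a non-negative integer as a MIDI variable-length quantity (7 bits per byte)."""
    out = [value & 0x7F]  # last byte has its high bit clear
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)  # earlier bytes set the continuation bit
        value >>= 7
    return bytes(reversed(out))


def write_midi(notes, program=0, channel=0, ticks_per_beat=480):
    """Build a format-0 Standard MIDI File from (pitch, start_tick, length_ticks) notes.

    `program` is a 0-based General MIDI program number, so swapping the
    instrument changes a single byte while the notes stay editable.
    """
    events = []  # (absolute_tick, order, event_bytes); order 0 puts note-offs first
    for pitch, start, length in notes:
        events.append((start, 1, bytes([0x90 | channel, pitch, 100])))  # note on, velocity 100
        events.append((start + length, 0, bytes([0x80 | channel, pitch, 0])))  # note off
    events.sort(key=lambda e: (e[0], e[1]))

    track = bytearray(vlq(0) + bytes([0xC0 | channel, program]))  # program change at tick 0
    now = 0
    for tick, _, data in events:
        track += vlq(tick - now) + data  # delta time, then the event itself
        now = tick
    track += vlq(0) + b"\xff\x2f\x00"  # end-of-track meta event

    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ticks_per_beat)
    return header + b"MTrk" + struct.pack(">I", len(track)) + bytes(track)


if __name__ == "__main__":
    riff = [(64, 0, 480), (67, 480, 480), (69, 960, 960)]  # E4, G4, A4 (times in ticks)
    as_guitar = write_midi(riff, program=30)  # GM "Distortion Guitar" (0-based)
    as_piano = write_midi(riff, program=0)    # same notes as "Acoustic Grand Piano"
    print(len(as_guitar), as_guitar[:4])
```

Writing either byte string to a `.mid` file produces something a player could open and rework in any sequencer, which is the practical meaning of "interoperable" here.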

That's the plan, and then we're externalising those AI models to the wider music industry as well. Our long-term plan is to go from an analysis engine to being something you can license.

“I have an ambition for it to be more than a music game – it’s a platform for self-expression, with community and social elements”

Robert Edgington

Our model is that players will create content in the game under an End User License Agreement that lets us train our own music AI on it, and then externalise that AI – it's an ethical way of training music AI without copyright infringement. We don’t want to be sued by people.
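The ethical-training argument rests on a consent gate: only content covered by the EULA feeds the training set. A hypothetical sketch of that filtering step follows; the `Track` fields, the `REQUIRED_EULA_VERSION` constant and the rule excluding external samples are all assumptions for illustration, not the studio's actual pipeline.

```python
from dataclasses import dataclass


@dataclass
class Track:
    track_id: str
    author_id: str
    accepted_eula_version: int        # latest EULA version the author accepted (0 = none)
    contains_external_samples: bool   # e.g. uploaded audio the player may not own


REQUIRED_EULA_VERSION = 2  # hypothetical: first EULA version with a training-use clause


def training_corpus(tracks):
    """Keep only tracks whose authors accepted a training-use EULA and that
    are built purely from in-game, licence-cleared material."""
    return [
        t for t in tracks
        if t.accepted_eula_version >= REQUIRED_EULA_VERSION
        and not t.contains_external_samples
    ]
```

Keeping the gate as an explicit, versioned check makes it auditable later, which matters if the trained models are licensed to third parties.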

Professor Wenwu Wang at the University of Surrey is a world expert in generative audio AI. He made a model called AudioLDM: it's a popular model online. You can type in, “Give me a trumpet in a cave!” and it will make you that sound straight away. It's very cool. We've got a project to retrain that model copyright-free – it’s a proper training library for infinite instruments within the game as well. It's another way to do a content pipeline; players can discover instruments as they go, and open a crate with a new sound, for instance.

What was the lightbulb moment when you realised you could use AI to achieve what you wanted to do?

My background isn't in games. I studied physics at university, and I ended up in neurotech. I co-founded a startup called Paradromics. They make brain implants for speech prostheses. I did the signal processing and ultimately worked on decoding music from the brain.

I left that company in 2020 because I wanted to do something creative. By then I had the AI skills, especially in music and signal processing, to tackle a music game.

“We’re saying, ‘Let's enable you to be the musician you want to be – and we’re taking out some of the grind along the way’”

Robert Edgington

If you want a character to be able to respond like a session musician, jamming with you, you could make a complicated procedural algorithm to do that. You might embed loads of music theory knowledge and spend millions developing it… Or you can train a generative AI that understands all of that and just works out of the box! If you do it right, it’s a natural fit for that role. In traditional AI, it’s like having a really good opponent in a shooter. It reacts to you naturally. In our case, it just happens that we're using a modern neural network, “generative AI”, to do that.

Please tell us a little about the underlying tech. How did you choose a model to work with, and how are you training it?

For the model where we're generating audio itself, waveforms, we're very much leaning on AudioLDM. The way that model was developed, it uses a combination of models. It operates in the latent space – generally, that’s a big trend in AI: compress down, distil into the latent space, and then make all your moves there, because they're a lot richer, and you get a lot more expressive power within there. AudioLDM did that for audio very effectively.
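For readers who want the general shape of a latent diffusion model, here is the standard formulation: an encoder $\mathcal{E}$ compresses the input $x$ into a latent $z_0$, noise is added according to a schedule $\beta_s$, a network $\epsilon_\theta$ learns to predict that noise given conditioning $c$ (for example a text prompt embedding), and a decoder $\mathcal{D}$ maps the cleaned-up latent back out. This is the textbook DDPM-style objective, not AudioLDM's exact recipe, which also involves a spectrogram autoencoder, a vocoder, and CLAP-based conditioning.

```latex
\begin{aligned}
z_0 &= \mathcal{E}(x), \qquad \hat{x} = \mathcal{D}(\hat{z}_0) \\
q(z_t \mid z_0) &= \mathcal{N}\!\left(z_t;\ \sqrt{\bar\alpha_t}\, z_0,\ (1-\bar\alpha_t)\, I\right),
  \qquad \bar\alpha_t = \prod_{s=1}^{t} (1-\beta_s) \\
z_t &= \sqrt{\bar\alpha_t}\, z_0 + \sqrt{1-\bar\alpha_t}\, \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I) \\
\mathcal{L} &= \mathbb{E}_{z_0,\, \epsilon,\, t}\left[\, \lVert \epsilon - \epsilon_\theta(z_t, t, c) \rVert_2^2 \,\right]
\end{aligned}
```

Generation runs the process in reverse: start from $z_T \sim \mathcal{N}(0, I)$, repeatedly remove the predicted noise to reach $\hat{z}_0$, then decode. Doing this in the compressed latent space rather than on raw waveforms is what makes it cheaper and, as Robert puts it, more expressive.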

We use similar models for our AI bandmates and AI critics as well, leveraging a diffusion-based framework, but on the symbolic level. By that, I mean MIDI. Without getting too into the weeds, it's a good model to do it with. Diffusion models are commonly used in image generation, but you can definitely repurpose them for music, and they’re a good fit because they're probabilistic. It allows the model freedom to do different interpretations that are still correct, and it follows the distribution rather than converging on one right answer, because, as with images, there isn't necessarily a right answer in music.
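To make the "no single right answer" point concrete: a probabilistic model produces a distribution over options, and sampling from it yields different but still valid choices, whereas always taking the most likely option repeats itself. The toy below is not a diffusion model; it uses a hand-written, hypothetical distribution over the next bass note to show the difference between greedy and sampled output.

```python
import random

# Hypothetical model output: probabilities for the next bass note over a C major chord.
# Every option with non-zero probability is a chord tone, so every sample "works".
NEXT_NOTE_PROBS = {"C": 0.5, "E": 0.2, "G": 0.3}


def greedy(probs):
    """Always pick the single most likely note."""
    return max(probs, key=probs.get)


def sample(probs, rng):
    """Draw a note in proportion to its probability."""
    notes = list(probs)
    return rng.choices(notes, weights=[probs[n] for n in notes], k=1)[0]


if __name__ == "__main__":
    rng = random.Random(7)  # seeded so runs are reproducible
    print([greedy(NEXT_NOTE_PROBS) for _ in range(5)])        # ['C', 'C', 'C', 'C', 'C']
    print([sample(NEXT_NOTE_PROBS, rng) for _ in range(5)])  # varied, all chord tones
```

A diffusion model gets the same benefit at a larger scale: each run starts from fresh random noise, so the same prompt or backing track can yield several plausible parts.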

Let’s talk about your in-game music critics. Whether a piece of music is good is subjective, so how do you analyse it and give critical feedback using AI?

We're going to train it on engagement metrics within the game. For our initial library, we’ll use some previously created measures of how good a song is, compiled from chart positions, number of plays, and so on.

You could also give it a personal rating, if you wanted, but obviously, we don't really want to do that. It's not a very reliable measure, because a number one from Taylor Swift may not necessarily be the greatest song in the world! The field’s called “hit song science” and it’s why, when you train these kinds of models, you can't just rely on one of these metrics. You have to provide the model with all the information. Yes, it was a number one. Yes, it has millions of plays. Yes, it's also written by someone popular like Taylor Swift. Genre, energy, these kinds of things; if you condition the model with all that information, it can then learn to predict it.
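Robert's point is that a critic model should see the context (artist popularity, genre, energy) alongside the outcome metrics, instead of learning from a single "it was a number one" label. A minimal sketch of assembling one training example as a flat vector; the field names, the genre list, and the scaling choices (log of play count, chart position mapped to 0 to 1) are assumptions, and which entries act as inputs versus prediction targets is a design choice left open here.

```python
import math

GENRES = ["pop", "rock", "synthwave", "hip-hop"]  # hypothetical genre vocabulary


def chart_score(position, chart_size: int = 100) -> float:
    """Map chart position to [0, 1]: number one -> 1.0, unplaced (None) -> 0.0."""
    if position is None:
        return 0.0
    return (chart_size - position + 1) / chart_size


def feature_vector(song: dict) -> list:
    """Combine outcome metrics with context so a model can tell
    'good song' apart from 'popular artist'."""
    genre_one_hot = [1.0 if song["genre"] == g else 0.0 for g in GENRES]
    return [
        chart_score(song.get("chart_position")),
        math.log10(1 + song["plays"]),  # compress play counts spanning many orders of magnitude
        song["artist_popularity"],      # assumed 0-1, e.g. a normalised follower count
        song["energy"],                 # assumed 0-1 audio feature
    ] + genre_one_hot
```

Including artist popularity explicitly is what lets a model learn that a Taylor Swift number one says something about the artist as well as the song.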

Another way we're going to tackle it is by leaning into the subjective bias, by framing it purposefully as the bias of the NPC judges. For example, there may be a panel of AI judges with different tastes. This subjectivity will add to the gameplay, we think.
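A panel of judges with different tastes could be as simple as each judge weighting the same underlying critic outputs differently. The sketch below is hypothetical throughout: the judge names, the feature names, and the weights are invented for illustration.

```python
# Hypothetical critic outputs for one track, each in [0, 1].
track_features = {"melody": 0.8, "groove": 0.6, "originality": 0.9, "polish": 0.4}

# Each judge's taste is a set of weights over the same features (each set sums to 1).
JUDGES = {
    "The Purist":   {"melody": 0.4, "groove": 0.1, "originality": 0.1, "polish": 0.4},
    "The Raver":    {"melody": 0.1, "groove": 0.6, "originality": 0.1, "polish": 0.2},
    "The Maverick": {"melody": 0.2, "groove": 0.1, "originality": 0.6, "polish": 0.1},
}


def judge_scores(features, judges):
    """Score out of 10 per judge: a weighted sum of the shared features."""
    return {
        name: round(10 * sum(w * features[f] for f, w in weights.items()), 1)
        for name, weights in judges.items()
    }


def panel_score(scores):
    """Average of the individual judges' scores."""
    return sum(scores.values()) / len(scores)


if __name__ == "__main__":
    scores = judge_scores(track_features, JUDGES)
    print(scores)  # the same track lands differently with each judge
    print(panel_score(scores))
```

Because the bias lives in explicit, per-judge weights, designers can give each NPC a recognisable personality and players can learn which judge suits their style.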

What is the degree of human input? Is it about making a complete song at the push of a button? Or is there flexibility for the player to edit and change each step?

We very much want a human to start [the process] and we want to keep the human in the loop. You'll have made a bassline; you add the guitar; but you can't handle the cowbell, so you need AI to do it for you. That's it.
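The "human starts, AI fills a gap" rule can be expressed as an arrangement in which AI output is only allowed into parts the player explicitly requests, and never over a human-written part. A hypothetical sketch: `Part`, `Song`, `request_ai_part` and `generate_part` are illustrative names, and `generate_part` is a placeholder for whatever model call the game actually makes.

```python
from dataclasses import dataclass, field


@dataclass
class Part:
    instrument: str
    notes: list = field(default_factory=list)
    author: str = "human"  # "human" or "ai"


@dataclass
class Song:
    parts: dict = field(default_factory=dict)  # instrument name -> Part


def generate_part(song: Song, instrument: str) -> list:
    """Placeholder for the AI bandmate; a real model would condition on song.parts."""
    return [(56, 0.0), (56, 1.0)]  # 56 = cowbell on the General MIDI percussion map


def request_ai_part(song: Song, instrument: str) -> Part:
    """Fill one requested part with AI output, refusing to start a song or replace human work."""
    if not song.parts:
        raise ValueError("start the song yourself before asking for help")
    existing = song.parts.get(instrument)
    if existing is not None and existing.author == "human" and existing.notes:
        raise ValueError(f"{instrument} already has a human-written part")
    part = Part(instrument, generate_part(song, instrument), author="ai")
    song.parts[instrument] = part
    return part
```

Tagging each part with its author also gives the game a simple way to show players, and judges, which parts of a track they wrote themselves.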

“We'll be filling the worlds with lots of different sounds, so the players can record and sample them in their songs as well. You may see a bird on a tree, for instance, and you can add it in”

Robert Edgington

We’ll also use it the other way round. You approach an NPC on the Synthwave planet, and they're playing some synth already, and they encourage you to jam with them. There'll be a pre-generated song that you can then jump on and contribute to.

We will use it sparingly. The AI costs money to run, which will need monetising, so we purposefully want to keep it scarce. The models are symbolic, though – they're lighter weight. Our aim is to actually get them onto mobile over time.
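Keeping AI scarce for cost reasons is, in engineering terms, a quota. One common way to implement it is a per-player token bucket: each generation spends a credit, and credits refill slowly over time. The sketch below is an assumption, not the game's design; the capacity and refill rate are made-up numbers, and the clock is injectable so the logic can be tested without waiting.

```python
import time


class GenerationBudget:
    """Per-player token bucket: at most `capacity` credits, refilled at `refill_per_hour`."""

    def __init__(self, capacity: int = 3, refill_per_hour: float = 1.0, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_sec = refill_per_hour / 3600.0
        self.clock = clock
        self.tokens = float(capacity)  # start with a full bucket
        self.last = clock()

    def _refill(self) -> None:
        """Add credits for the time elapsed since the last check, capped at capacity."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_per_sec)
        self.last = now

    def try_spend(self, cost: float = 1.0) -> bool:
        """Spend `cost` credits if available; return False (skip the AI call) otherwise."""
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A bucket like this also gives monetisation a natural lever: extra credits or a larger capacity can be a reward or a purchase, without changing the generation code.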

But also a reason not to overdo the AI is the fantasy of the game. If you're letting AI do it all for you, it's not really your song. We're not in the market to say, “Here’s a ready-made song to use as a backing track for your YouTube video.” We’re not an AI music company. We’re saying, “Let's enable you to be the musician you want to be – and we’re taking out some of the grind along the way.”

You’re building a whole universe for this music experience?

It's a live ops game, and it's called Starstruck Galaxy, and the Galaxy part comes in with planets joining every few months. So there'll be Mario Galaxy-style tiny planets, with music. Each one will be a larger-than-life caricature of a musical genre, with NPCs. We’ll make one every three months. They’re about the size of a Zelda village or two in terms of content. Quite light, but it's more about the emergent stories that the players will make through their music and the environmental puzzles we will be putting on those planets.

That part of the game is sometimes described as Pokémon-like, where you're going on this adventure to different lands, collecting instruments. You're challenging NPCs as you go. We'll also be filling the worlds with lots of different sounds, so the players can record and sample them in their songs as well. You may see a bird on a tree, for instance, and you can take that and add it in.
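Sampling a found sound, such as that birdsong, into a song usually means replaying it at different pitches. The simplest method is resampling: a shift of n semitones changes playback speed by a factor of 2^(n/12), which also changes the sample's length. The minimal sketch below uses linear interpolation over a list of floats; a production audio engine would use a higher-quality resampler or a time-preserving pitch shifter, and nothing here comes from the game's engine.

```python
def semitone_ratio(semitones: float) -> float:
    """Playback-rate factor for a pitch shift in equal temperament: 2^(n/12)."""
    return 2.0 ** (semitones / 12.0)


def repitch(samples: list, semitones: float) -> list:
    """Resample `samples` by linear interpolation so it plays `semitones` higher
    (negative = lower). Output length shrinks or grows by the same factor."""
    ratio = semitone_ratio(semitones)
    out_len = int(len(samples) / ratio)
    out = []
    for i in range(out_len):
        pos = i * ratio  # read position in the original sample
        j = int(pos)
        frac = pos - j
        nxt = samples[j + 1] if j + 1 < len(samples) else samples[j]
        out.append(samples[j] * (1 - frac) + nxt * frac)  # blend neighbouring samples
    return out
```

An octave up (12 semitones) reads every second sample and halves the length; an octave down reads each sample twice, with interpolated values in between, and doubles it.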

Your background was not in games. Do you think of yourself as a gamer? What are your other gaming influences, beyond what you’ve already mentioned?

I've always loved games. When I was 18, I went to Swindon library and took out a book on C++ for game development! But I was more of a scientist at the time, and really loved science, so I thought, “The games route isn’t for me now.” But there was always the siren call of game-making.

It got to the point in 2020 where it had never been easier to start making a game. I had the AI skills and the startup experience to do it. I watched loads of GDC videos. I read loads of books, particularly The Art of Game Design: A Book of Lenses by Jesse Schell – I remember, I read the whole thing cover to cover. When I got to the last page, that's when I started. I thought, “Right now – let's make the game!”

In terms of game influences, there are those solo indies who make a game and produce art that I love. Now I’m living that story… and it's less glamorous! I play a lot of Overwatch still, although I can’t say there’s any hint of that in our game.

My main influence is trying to [overcome] my parental issues! I'm trying to prove what a game can be, and show that it doesn't necessarily have to just be run, shoot, kill. We're getting to a point in games because of AI where we can actually start to access these more nuanced parts of the human psyche and align them to different core motivational drivers. And that's what I want to make happen, and that's really what this game is all about: trying to appeal to the higher calling of being a musician.

Some traditional gamers are sceptical of AI, for a number of reasons. Do you think you've got an educational challenge on your hands when the game comes out?

For sure. I think there'll be a group of people we just won't convince. We're training it ethically, humans are in the loop, it's actually generating MIDI, but I don't think we're going to convince everyone.

“Diffusion models are commonly used in image generation, but you can repurpose them for music, and they’re a good fit because they're probabilistic. It allows the model freedom to do different interpretations that are still correct”

Robert Edgington

All of these things we’re doing, and the fact that we're adding NPCs, will give it character, and I'm hoping that will soften any negative opinions. I think of it as more like traditional AI in a game, rather than a modern, “generative AI game”. But it may be a hard sell to the public. There's something in the semantic space there – people never had a problem with the procedurally generated worlds of No Man's Sky, for instance!

What’s the road map? How far are you from launch?

We're still fundraising and aiming for a soft launch in mid-2026. We’re aiming for the full live ops launch, with licensed musicians, at the end of that year. I've got a working vertical slice right now.

I've been told many times I should have released it already! But I have an ambition for it to be more than a music game – it’s a platform for self-expression, with community and social elements. So we want to launch it with a lot more of those features in place. It's taking a little more time, but I'm really enjoying the journey.

How big is your team, and how are you approaching the commitment of maintaining live ops after launch?

I was a solo dev for the first two years. Then I was really lucky to have two great contractors come onto the core team: Bence Kovács and Gabriele Caruso.

Bence is a very talented audio developer who also has his own company called Playful Tones. He's all about creating playful music experiences, and he’s an expert at putting audio on mobile, so he's been a great fit for making the music engine.

And then Gabriele is an amazing artist who has a very cool punky anime style, which is what I wanted to go for. Inspirations were Gorillaz and [Scott Pilgrim].

And recently, we've been fortunate to have a marketing lead join, as well as a live ops director.

“We want to keep the human in the loop. We will use AI sparingly. A reason not to overdo the AI is the fantasy of the game. If you're letting AI do it all for you, it's not really your song”

Robert Edgington

What's been great is we've really benefited from the support of Brighton University as well. So I was lucky to be able to host four art students, who helped make content for the game as part of one of their placements. And we’ve benefited from people just wanting to get involved who have contacted us to help, particularly on the sound design. Now we’ve got three guys helping like that. One of them said to me, “Oh, by the way, I'm an international beat boxer. Is that relevant?” That's very relevant!

What are your bold predictions about how AI could change the games industry? Does AI deserve all the attention it gets right now?

Games in general can't escape the gravity of generative story. Be it a battle royale or something with evergreen content where it just keeps on going – that sort of emergent gameplay is important. I think AI will be a natural companion to embellish that emergent gameplay, creating exciting variability and surprise.

We're going to see loads of LLM applications with clever ways you can interact with NPCs. But I think I'm more interested in people building foundational models for a specific objective in the game: content creation, level generation, player modelling. That will have a huge impact.

Further down the rabbit hole

Some useful news, views and links to keep you going until next time:

The SAG-AFTRA video game strike is officially over as more than 95 percent of voting performers supported a deal providing AI protections.

Were you at the RAISE Summit 2025 in Paris last week? It included the world’s largest AI hackathon: a cool €5 million prize fund saw 1,100 startups compete.

LangChain, the AI infrastructure startup providing tools for LLM-powered applications, is raising again and is looking at an approximate $1 billion valuation.

Artificial Agency (who we covered in March) has launched the alpha version of its AI behaviour engine, enabling studios to embed runtime decision-making agents into games.

Meanwhile, the AI cosy life sim game AuraVale, which AI Gamechangers covered back in November, has been put on hold.

Do coding tools actually slow developers down? AI research non-profit METR ran tests showing that experienced open-source developers took 19% longer to complete tasks when using AI tools (via AI debunker Emmanuel Maggiori).

If you enjoyed our recent chat with Replikant, check out their video about how to build an interactive game show host.

Tommy Thompson shares a great deep dive into the backlash against generative AI in big games, in his AI and Games newsletter: “When Players Discover You’re Using Generative AI”

AI was a hot topic at the recent Lagos Games Week, where speakers discussed the role of AI in Africa’s dev ecosystem.

The storytellers at Aarda AI are preparing to open Aarda Play up to its first 100 users next week. Starting on 16th July, registered Aarda users will have the opportunity to build their own world and instantly make it playable by everyone. Discord link for info.

With all this news about companies we’ve featured recently, we’re planning to revisit our AI Gamechangers interviewees from last year and see how things have changed for them. Watch this space!